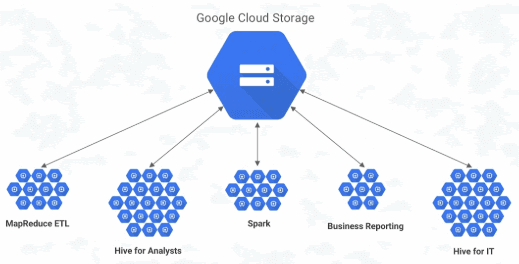

Cloud Dataproc is a managed Google Cloud service for running Apache Spark and Apache Hadoop clusters in a simple, cost-efficient way. Cluster work that used to take days now takes minutes, and you pay only for the resources you actually use. Cloud Dataproc also integrates with other Google Cloud Platform services, which together give you a complete platform for data processing, analytics and machine learning. In short, Cloud Dataproc makes Hadoop easy to run.

Fast and Scalable Data Processing :-

Create Cloud Dataproc clusters quickly and resize them at any time, from three nodes to hundreds of nodes, so you do not have to worry about your data pipelines outgrowing your clusters. Because each cluster operation takes little time on average, you can spend more time on results and less time on infrastructure.
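Resizing is a single command. The sketch below only builds the argument list for `gcloud dataproc clusters update` with the `--region` and `--num-workers` flags; it does not run it. The cluster name `example-cluster` and region `us-central1` are hypothetical placeholders, and the two-worker minimum check is an assumption of this sketch that mirrors the three-node floor mentioned above (one master plus two workers).

```python
import shlex


def resize_command(cluster: str, region: str, num_workers: int) -> list[str]:
    """Build a gcloud command that resizes a cluster's primary worker group.

    Assumption: a standard cluster keeps at least two primary workers,
    i.e. three nodes in total with the master.
    """
    if num_workers < 2:
        raise ValueError("a standard cluster needs at least two primary workers")
    return [
        "gcloud", "dataproc", "clusters", "update", cluster,
        f"--region={region}",
        f"--num-workers={num_workers}",
    ]


# Hypothetical cluster and region: grow the worker group to 200 nodes.
cmd = resize_command("example-cluster", "us-central1", 200)
print(shlex.join(cmd))
```

Returning a list instead of a shell string lets you pass it straight to `subprocess.run` without quoting problems.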

Hadoop Made Easy with Cloud Dataproc :-

Cloud Dataproc is built on Google Cloud Platform and has a low, easy-to-understand price based on actual use, measured by the second. Cloud Dataproc clusters can also include lower-cost preemptible instances, which give you powerful clusters at an even lower total cost.
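Per-second billing is easiest to see with a worked estimate. This sketch assumes a one-minute minimum charge and uses an illustrative rate of $0.010 per vCPU-hour for the Dataproc fee; check the current pricing page before relying on the number. Compute Engine VMs and disks are billed separately and are not included.

```python
def dataproc_fee(vcpus: int, seconds: float, rate_per_vcpu_hour: float = 0.010) -> float:
    """Estimate the Dataproc service fee for one cluster run.

    Assumptions: billing by the second with a one-minute minimum, and an
    illustrative rate per vCPU-hour. Compute Engine costs are excluded.
    """
    billed_seconds = max(seconds, 60)  # apply the one-minute minimum
    return vcpus * (billed_seconds / 3600) * rate_per_vcpu_hour


# Three n1-standard-4 nodes (4 vCPUs each) running for 90 minutes.
fee = dataproc_fee(vcpus=3 * 4, seconds=90 * 60)
print(f"${fee:.2f}")
```

With these assumptions, 12 vCPUs for 1.5 hours cost 12 × 1.5 × $0.010 = $0.18 in Dataproc fees, and a 30-second job is billed as a full minute.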

The Spark and Hadoop ecosystem provides documentation and tools that you can take with you to Cloud Dataproc. Because Cloud Dataproc offers regularly updated versions of Hive, Pig, Hadoop and Spark, you can keep using the APIs and tools you already know instead of learning new ones.
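Existing Spark code runs on Dataproc as a normal job submission. The sketch below builds a `gcloud dataproc jobs submit spark` command for the stock SparkPi example. The jar path is where Dataproc images usually place the Spark examples, so treat it as an assumption and verify it on your image; the cluster name and region are hypothetical.

```python
import shlex


def spark_pi_job(cluster: str, region: str, partitions: int = 1000) -> list[str]:
    """Build a gcloud command that submits the SparkPi example job.

    The examples jar path is an assumption; verify it on your image version.
    """
    return [
        "gcloud", "dataproc", "jobs", "submit", "spark",
        f"--cluster={cluster}",
        f"--region={region}",
        "--class=org.apache.spark.examples.SparkPi",
        "--jars=file:///usr/lib/spark/examples/jars/spark-examples.jar",
        "--",  # arguments after this marker go to the SparkPi main class
        str(partitions),
    ]


print(shlex.join(spark_pi_job("example-cluster", "us-central1")))
```

Hive and Pig scripts follow the same pattern with `gcloud dataproc jobs submit hive` and `gcloud dataproc jobs submit pig`.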

Automated cluster management: managed deployment, logging and monitoring let you focus on your data while your clusters stay stable. Resizable clusters: clusters can be created and scaled quickly, with a choice of virtual machine types, disk sizes, node counts and networking options.
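Machine type, node count, disk size and network can all be chosen when the cluster is created. This sketch builds a `gcloud dataproc clusters create` command with those options; the defaults (`n1-standard-4`, two workers, 500 GB boot disks) and the subnet name `example-subnet` are illustrative assumptions, not recommendations.

```python
import shlex
from typing import Optional


def create_command(
    cluster: str,
    region: str,
    machine_type: str = "n1-standard-4",
    num_workers: int = 2,
    boot_disk_gb: int = 500,
    subnet: Optional[str] = None,
) -> list[str]:
    """Build a cluster-create command with explicit sizing choices."""
    cmd = [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}",
        f"--master-machine-type={machine_type}",
        f"--worker-machine-type={machine_type}",
        f"--num-workers={num_workers}",
        f"--master-boot-disk-size={boot_disk_gb}GB",
        f"--worker-boot-disk-size={boot_disk_gb}GB",
    ]
    if subnet:  # optional networking choice
        cmd.append(f"--subnet={subnet}")
    return cmd


print(shlex.join(create_command("example-cluster", "us-central1",
                                num_workers=4, subnet="example-subnet")))
```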

Initialization Actions:-

Run initialization actions to install or customize the settings and libraries you need when your cluster is created. Cloud Dataproc also has built-in integration with Cloud Storage, BigQuery, Bigtable, Stackdriver Logging and Stackdriver Monitoring, giving you a complete and robust data platform.
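Initialization actions are scripts stored in Cloud Storage that Dataproc runs on each node while the cluster is being created. The sketch below passes them with `--initialization-actions` and bounds their run time with `--initialization-action-timeout`. The bucket `gs://example-bucket` and the script name are hypothetical, and rejecting non-`gs://` paths is a choice of this sketch.

```python
import shlex


def create_with_init_actions(
    cluster: str, region: str, scripts: list[str], timeout: str = "10m"
) -> list[str]:
    """Build a cluster-create command that runs setup scripts on each node.

    This sketch only accepts Cloud Storage (gs://) script URIs.
    """
    if not scripts or not all(s.startswith("gs://") for s in scripts):
        raise ValueError("pass one or more gs:// script URIs")
    return [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}",
        # Several scripts are given as one comma-separated list.
        "--initialization-actions=" + ",".join(scripts),
        # Maximum time each script may run.
        f"--initialization-action-timeout={timeout}",
    ]


cmd = create_with_init_actions(
    "example-cluster", "us-central1", ["gs://example-bucket/install-libs.sh"]
)
print(shlex.join(cmd))
```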

Image versioning: image versions let you switch between different releases of Apache Spark, Apache Hadoop and other tools. Developer tools: you have multiple ways to manage a cluster, including an easy-to-use web UI, the Google Cloud SDK, SSH access and RESTful APIs.
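The SDK and the REST API are two views of the same operations. This sketch builds the Dataproc v1 REST URL for listing clusters in a region, the equivalent `gcloud dataproc clusters list` command, and a create command pinned with `--image-version`. The project ID and image version `2.1` are placeholders; check which image versions are currently supported. A real REST call also needs an OAuth access token, which this sketch does not handle.

```python
import shlex
from urllib.parse import quote

# Base URL of the Dataproc REST API, version 1.
API_ROOT = "https://dataproc.googleapis.com/v1"


def clusters_list_url(project_id: str, region: str) -> str:
    """REST endpoint for listing clusters (send an authorized GET request)."""
    return f"{API_ROOT}/projects/{quote(project_id)}/regions/{quote(region)}/clusters"


def clusters_list_command(region: str) -> list[str]:
    """The equivalent Google Cloud SDK command."""
    return ["gcloud", "dataproc", "clusters", "list", f"--region={region}"]


def create_with_image(cluster: str, region: str, image_version: str) -> list[str]:
    """Pin a new cluster to a specific Dataproc image version."""
    return [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}",
        f"--image-version={image_version}",
    ]


project, region = "example-project", "us-central1"  # hypothetical values
print(clusters_list_url(project, region))
print(shlex.join(clusters_list_command(region)))
print(shlex.join(create_with_image("example-cluster", region, "2.1")))
```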

Automatic or manual configuration: Cloud Dataproc automatically configures hardware and software on clusters for you while still allowing manual control. Flexible virtual machines: clusters can use custom machine types and preemptible virtual machines, so they can be sized exactly to your requirements. High availability: run clusters with multiple master nodes and set jobs to restart on failure, so that your clusters and jobs stay highly available.
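The three features above map to a few command-line flags. This sketch formats a custom machine type name (`custom-VCPUS-MEMORY_MB`), creates a cluster with three masters (high-availability mode) plus preemptible secondary workers, and submits a job that restarts on failure via `--max-failures-per-hour`. All names are hypothetical, and only the 256 MB memory granularity is checked; Compute Engine applies further limits that this sketch does not enforce.

```python
import shlex


def custom_machine_type(vcpus: int, memory_mb: int) -> str:
    """Format a custom machine type name such as custom-6-23040.

    Only the 256 MB memory granularity is checked here.
    """
    if memory_mb % 256 != 0:
        raise ValueError("memory must be a multiple of 256 MB")
    return f"custom-{vcpus}-{memory_mb}"


def ha_cluster_command(cluster: str, region: str, worker_type: str,
                       secondary_workers: int) -> list[str]:
    return [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}",
        "--num-masters=3",  # three masters means high-availability mode
        f"--worker-machine-type={worker_type}",
        # Extra workers on preemptible VMs add low-cost capacity.
        f"--num-secondary-workers={secondary_workers}",
        "--secondary-worker-type=preemptible",
    ]


def restartable_spark_job(cluster: str, region: str,
                          max_failures_per_hour: int = 5) -> list[str]:
    return [
        "gcloud", "dataproc", "jobs", "submit", "spark",
        f"--cluster={cluster}",
        f"--region={region}",
        # Restart the job automatically, up to this many failures per hour.
        f"--max-failures-per-hour={max_failures_per_hour}",
        "--class=org.apache.spark.examples.SparkPi",
        "--jars=file:///usr/lib/spark/examples/jars/spark-examples.jar",
    ]


worker = custom_machine_type(6, 23040)  # 6 vCPUs, 22.5 GB of memory
print(shlex.join(ha_cluster_command("example-cluster", "us-central1", worker, 4)))
print(shlex.join(restartable_spark_job("example-cluster", "us-central1")))
```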

Bigtable:-

When you create a Hadoop cluster, you can use Cloud Dataproc to create one or more Compute Engine instances that connect to a Cloud Bigtable instance and run Hadoop jobs. Cloud Dataproc can automate installing Hadoop and the HBase adapter for Cloud Bigtable and configuring them to work together. After you create your cluster, you can use it to run Hadoop jobs that read data from and write data to Cloud Bigtable.
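The HBase adapter finds Cloud Bigtable through ordinary `hbase-site.xml` properties. This sketch generates that file with the connection class and the project and instance properties used by the Cloud Bigtable HBase client; the `hbase1_x` or `hbase2_x` class must match your HBase client version, and the project and instance IDs are hypothetical.

```python
import xml.etree.ElementTree as ET


def bigtable_hbase_site(project_id: str, instance_id: str, hbase_major: int = 2) -> str:
    """Return hbase-site.xml content that points the HBase client at Bigtable."""
    connection_class = (
        f"com.google.cloud.bigtable.hbase{hbase_major}_x.BigtableConnection"
    )
    props = {
        "hbase.client.connection.impl": connection_class,
        "google.bigtable.project.id": project_id,
        "google.bigtable.instance.id": instance_id,
    }
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


print(bigtable_hbase_site("example-project", "example-instance"))
```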

A convenient way to manage Cloud Bigtable is the cbt command-line tool. You can use cbt to create, modify and delete tables and to get information about existing tables, and it supports many other commands as well.
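A typical table lifecycle with cbt looks like the command sequence below. The sketch passes `-project` and `-instance` explicitly on every call (these can also come from a `~/.cbtrc` file) and only builds the commands. The project, instance, table and column family names are hypothetical.

```python
import shlex


def cbt(project: str, instance: str, *args: str) -> list[str]:
    """Prefix a cbt subcommand with explicit project and instance flags."""
    return ["cbt", "-project", project, "-instance", instance, *args]


def table_lifecycle(project: str, instance: str, table: str,
                    family: str) -> list[list[str]]:
    return [
        cbt(project, instance, "createtable", table),           # create the table
        cbt(project, instance, "createfamily", table, family),  # add a column family
        cbt(project, instance, "ls", table),                    # list its column families
        cbt(project, instance, "deletetable", table),           # delete the table
    ]


for cmd in table_lifecycle("example-project", "example-instance", "my-table", "cf1"):
    print(shlex.join(cmd))
```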

Exporting data as sequence files: you can export a table from HBase or Cloud Bigtable as a series of Hadoop sequence files. If you are migrating from HBase, you can export your table from HBase and then import it into Cloud Bigtable. When you export a table, record the list of column families that the table uses, because the destination table needs the same column families before you import the data.
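The migration steps above can be sketched as a command plan: run HBase's `org.apache.hadoop.hbase.mapreduce.Export` job on the source cluster, then create the destination table in Cloud Bigtable with every recorded column family. The import step itself is left out because the right tool depends on your setup. The table name, family names, export directory, project and instance are hypothetical.

```python
import shlex


def cbt(project: str, instance: str, *args: str) -> list[str]:
    """Prefix a cbt subcommand with explicit project and instance flags."""
    return ["cbt", "-project", project, "-instance", instance, *args]


def migration_commands(table: str, families: list[str], export_dir: str,
                       project: str, instance: str) -> list[list[str]]:
    if not families:
        raise ValueError("record the table's column families before exporting")
    # On the source HBase cluster: write the table out as sequence files.
    export = ["hbase", "org.apache.hadoop.hbase.mapreduce.Export", table, export_dir]
    # Against Cloud Bigtable: recreate the table and each recorded family.
    prepare = [cbt(project, instance, "createtable", table)]
    prepare += [cbt(project, instance, "createfamily", table, f) for f in families]
    return [export, *prepare]


for cmd in migration_commands("my-table", ["cf1", "cf2"], "/export/my-table",
                              "example-project", "example-instance"):
    print(shlex.join(cmd))
```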

Recommended Audience:

Software developers

Team Leaders

Project Managers

Database Administrators

Prerequisites:

No prior experience is required to start learning Hadoop administration. It helps to have some knowledge of Big Data technologies and a basic understanding of Java, and familiarity with object-oriented programming (OOP) concepts and Linux commands is also useful.