Logs are a source of stress in most companies. Many companies cannot do without logs today, but logs are painful for the operations team because they occupy a large amount of space. As a result, logs are rarely kept on the local disk. Big companies usually collect the logs, process them, and store them in a repository other than the disk so that both the operations team and developers can retrieve them effectively. This frustrates both developers and the operations team, because the logs are no longer at their original location when they are needed. Apache Flume was designed to overcome this problem.

Apache Flume:

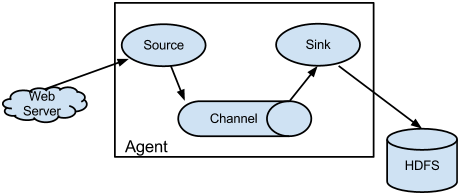

Apache Flume is a tool designed for efficiently collecting large amounts of streaming data and moving it into the Hadoop Distributed File System (HDFS) in a distributed environment. It addresses the problems of both developers and the operations team by letting them push logs from application servers to various repositories through a highly configurable agent. A Flume agent is responsible for taking in huge amounts of data from different sources such as Syslog, netcat and JMS. The agent passes the data to a sink, commonly a distributed file system such as HDFS. Multiple Flume agents can be chained together by connecting the sink of one agent to the source of another.


The workflow of Flume depends on two components. 1. The master acts as a reliable configuration service that nodes use to retrieve their configuration. 2. The nodes apply that configuration: when the configuration of a particular node changes, the master dynamically updates it on that node.
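The master and node relationship described above can be illustrated with a small sketch. This is not Flume's API: `Master`, `Node`, `set_config`, `get_config` and `refresh` are hypothetical names, and the version counter is an assumption used only to show how a node might notice that its configuration has changed.

```python
class Master:
    """Hypothetical configuration service: maps node names to (version, config)."""

    def __init__(self):
        self._configs = {}

    def set_config(self, node_name, config):
        # Bump the version each time a node's configuration is replaced.
        version = self._configs.get(node_name, (0, None))[0] + 1
        self._configs[node_name] = (version, dict(config))

    def get_config(self, node_name):
        # Unknown nodes get version 0 and an empty configuration.
        return self._configs.get(node_name, (0, {}))


class Node:
    """Hypothetical node that retrieves its configuration from the master."""

    def __init__(self, name, master):
        self.name = name
        self.master = master
        self.version = 0
        self.config = {}

    def refresh(self):
        """Ask the master for the config; apply it only if the version changed."""
        version, config = self.master.get_config(self.name)
        if version != self.version:
            self.version, self.config = version, dict(config)
            return True
        return False


master = Master()
node = Node("web-1", master)
master.set_config("web-1", {"source": "syslog", "sink": "hdfs"})
node.refresh()  # the node now carries the configuration held by the master
```

Keeping the configuration in one place means a change made on the master reaches the node on its next refresh, without editing anything on the node itself.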


A node in Flume is generally a connector responsible for transferring data between a source and a sink. The characteristics and role of a Flume node are determined by the behavior of its source and sink. If none of the available sources and sinks matches their requirements, users can define their own by writing code. A Flume node is configured with the help of a sink detector.

Architecture:

The architecture of Flume is simple. It has three important roles:

Source: It is responsible for bringing data into the queue or file.

Sink: It is responsible for moving data out of the queue or file.

Channel: It is the connection between sources and sinks, holding the data (in a queue or file) until a sink takes it.
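To make the three roles concrete, here is a minimal in-process sketch of a source writing into a channel and a sink draining it. It only models the idea and is not Flume code: `MemoryChannel`, `ListSource`, `CollectingSink` and the `capacity` parameter are hypothetical names chosen for illustration.

```python
import queue


class MemoryChannel:
    """Bounded in-memory buffer that sits between a source and a sink."""

    def __init__(self, capacity=1000):
        self._queue = queue.Queue(maxsize=capacity)

    def put(self, event):
        # Raises queue.Full if the sink has not kept up with the source.
        self._queue.put_nowait(event)

    def take(self):
        # Returns the oldest event, or None when the channel is empty.
        try:
            return self._queue.get_nowait()
        except queue.Empty:
            return None


class ListSource:
    """Source: turns incoming lines into events and puts them on the channel."""

    def __init__(self, lines, channel):
        self.lines = lines
        self.channel = channel

    def run(self):
        for line in self.lines:
            self.channel.put({"headers": {}, "body": line})


class CollectingSink:
    """Sink: takes events off the channel and delivers them (here, to a list)."""

    def __init__(self, channel):
        self.channel = channel
        self.delivered = []

    def drain(self):
        event = self.channel.take()
        while event is not None:
            self.delivered.append(event["body"])
            event = self.channel.take()


channel = MemoryChannel(capacity=10)
ListSource(["GET /index.html 200", "GET /missing 404"], channel).run()
sink = CollectingSink(channel)
sink.drain()
```

The source and the sink never call each other directly; the channel decouples them, so a slow sink shows up as a full buffer rather than as lost input.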

Workflow: The workflow of Flume is described with the help of the following diagram.

Before looking at the data flow, let's discuss the following concept:

Log Data: Data coming from various sources, such as application servers, cloud servers and enterprise servers, needs to be analyzed. This data is produced in the form of log files and events. The data in a log file is known as log data.

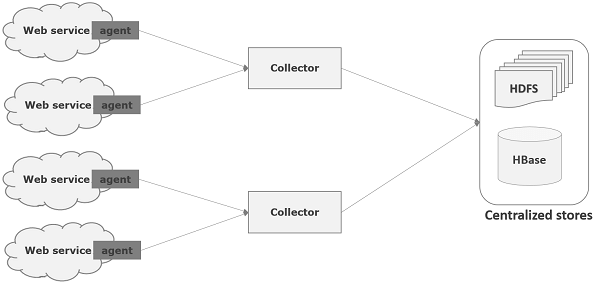

Flume is a framework used to move data into HDFS. In general, log servers generate events and logs, and these servers have Flume agents running on them. These Flume agents are responsible for receiving data from the data generators.

A collector is an intermediate node where the data from multiple sources is gathered. Just as there can be multiple agents, there can be multiple collectors. All the data in the collectors is then stored in a centralized store such as HDFS.
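The flow from agents through a collector to a central store can be sketched as follows. This is a simplified model under stated assumptions, not Flume code: `Agent`, `Collector`, `ingest`, `receive` and `flush` are hypothetical names, and a plain Python list stands in for the centralized store (HDFS in the text).

```python
from collections import deque


class Collector:
    """Intermediate node that gathers events from many agents."""

    def __init__(self, store):
        self.buffer = deque()
        self.store = store  # stand-in for the centralized store

    def receive(self, event):
        self.buffer.append(event)

    def flush(self):
        # Move every buffered event into the central store, oldest first.
        while self.buffer:
            self.store.append(self.buffer.popleft())


class Agent:
    """Runs on a log server and forwards each log line to a collector."""

    def __init__(self, host, collector):
        self.host = host
        self.collector = collector

    def ingest(self, line):
        # Tag the event with its origin so it can be traced after aggregation.
        self.collector.receive({"host": self.host, "body": line})


central_store = []
collector = Collector(central_store)
web = Agent("web-1", collector)
app = Agent("app-1", collector)

web.ingest("GET /index.html 200")
app.ingest("order 17 created")
collector.flush()
```

Because each event carries the host it came from, logs from many servers can share one store and still be told apart later.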

Features:

- It scales from environments with as few as five machines to environments with thousands of machines.

- It is stream oriented, fault tolerant and linearly scalable.

- It provides low latency and high throughput.

- It is easy to extend.

- Along with log files, Flume is also used to import data from social media sites such as Facebook and Twitter.

- It takes in data from multiple sources and stores it efficiently.

Recommended Audience: Software developers, ETL developers, project managers, team leads and business analysts.

Prerequisites: For learning Big Data Hadoop, it is good to have some knowledge of OOP concepts, but it is not mandatory. The trainers at OnlineITGuru will teach you those concepts if you are not familiar with them.
