Drawbacks of Hadoop:

Hadoop is an open source framework for the distributed storage and processing of large volumes of data, and it reads that data sequentially. Because of this sequential access, a user who wants to fetch the last-but-one row has to scan all the rows from the top. This is acceptable when the data set is small, but with a large data set it takes much longer to fetch and process that particular record. HBase provides a solution to this problem.
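The cost difference described above can be sketched in a few lines of Python. This is only an in-memory illustration, not Hadoop or HBase code: the names `sequential_fetch` and `keyed_fetch` and the sample rows are assumptions made up for the example.

```python
from bisect import bisect_left


def sequential_fetch(rows, target_key):
    """Scan rows from the top; return (value, number_of_rows_examined)."""
    examined = 0
    for key, value in rows:
        examined += 1
        if key == target_key:
            return value, examined
    return None, examined


def keyed_fetch(sorted_keys, values, target_key):
    """Binary-search a sorted key list; return the value or None."""
    i = bisect_left(sorted_keys, target_key)
    if i < len(sorted_keys) and sorted_keys[i] == target_key:
        return values[i]
    return None


# Hypothetical data set: zero-padded keys keep the rows sorted by key.
rows = [(f"row-{i:06d}", f"value-{i}") for i in range(100_000)]
keys = [k for k, _ in rows]
vals = [v for _, v in rows]
target = rows[-2][0]  # the last-but-one row

print(sequential_fetch(rows, target))   # ('value-99998', 99999)
print(keyed_fetch(keys, vals, target))  # value-99998
```

The sequential version examines 99,999 rows to reach the last-but-one record, while the keyed version needs only a handful of comparisons because the keys are sorted.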

HBase:

HBase is an open source, distributed, column-oriented NoSQL database built on top of Hadoop for reading and writing large volumes of data in random order. One can store data in HDFS either directly or through HBase. HBase sits on top of the Hadoop file system and provides read and write access to users. Data consumers can read and access the data randomly through HBase.


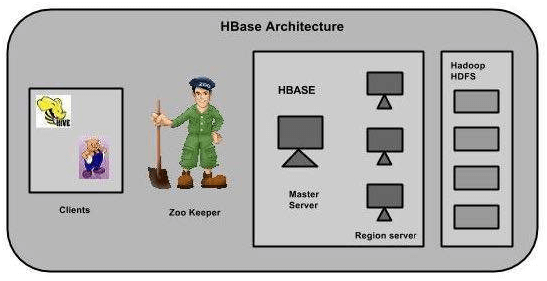

Architecture:

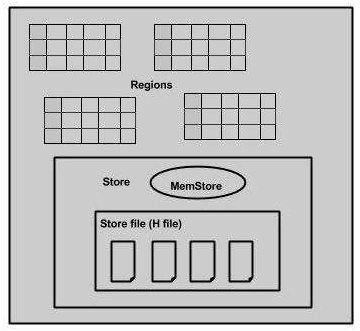

In HBase, tables are split into regions, and the regions are served by region servers. Regions are divided vertically by column family into stores. Stores are saved as files in HDFS.
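As a rough illustration of the vertical division by column family, the sketch below groups cells into one store per family. It is a simplified in-memory model, not the HBase storage format; the function `group_into_stores`, the family names, and the sample cells are hypothetical.

```python
from collections import defaultdict


def group_into_stores(cells):
    """Group (row_key, family, qualifier, value) cells by column family."""
    stores = defaultdict(dict)
    for row_key, family, qualifier, value in cells:
        # Each column family gets its own store within the region.
        stores[family][(row_key, qualifier)] = value
    return dict(stores)


# Hypothetical cells belonging to a single region.
cells = [
    ("user1", "info", "name", "Asha"),
    ("user1", "stats", "logins", 12),
    ("user2", "info", "name", "Ravi"),
]

stores = group_into_stores(cells)
print(sorted(stores))  # ['info', 'stats']
```

Keeping each family in its own store means a read that touches only the `info` columns does not need to look at the `stats` data.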

HBase has three major components: the master server, the client library, and the region servers. Region servers can be added or removed as per requirements. Let us discuss the architecture in detail.

Master server:

The master server assigns regions to the region servers and takes the help of Apache ZooKeeper for this task. Below are the responsibilities of the master server:

Coordination of region servers:

It assigns regions to new servers and also reassigns regions among the existing servers.

It monitors all the region server instances in the cluster.
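The coordination role can be pictured with a small sketch. The round-robin and least-loaded policies below are assumptions chosen for simplicity, not the balancing logic the HBase master actually uses, and the function and server names are hypothetical.

```python
def assign_regions(regions, servers):
    """Spread regions across servers round-robin; returns {server: [regions]}."""
    assignment = {server: [] for server in servers}
    for i, region in enumerate(regions):
        assignment[servers[i % len(servers)]].append(region)
    return assignment


def reassign_on_failure(assignment, failed_server):
    """Move the failed server's regions to the least-loaded survivors.

    Assumes at least one other server is still alive.
    """
    orphaned = assignment.pop(failed_server)
    for region in orphaned:
        target = min(assignment, key=lambda s: len(assignment[s]))
        assignment[target].append(region)
    return assignment


assignment = assign_regions(
    ["r1", "r2", "r3", "r4", "r5", "r6"], ["rs1", "rs2", "rs3"]
)
print(assignment)
# {'rs1': ['r1', 'r4'], 'rs2': ['r2', 'r5'], 'rs3': ['r3', 'r6']}

print(reassign_on_failure(assignment, "rs3"))
# {'rs1': ['r1', 'r4', 'r3'], 'rs2': ['r2', 'r5', 'r6']}
```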

Admin functions:

It is the interface for schema and metadata operations such as creating, updating, and deleting tables in the database.

Region server:

HBase tables are divided horizontally by row key range into regions. A region contains all the rows in the table between the region's start key and end key. A region server hosts a set of regions and has the following responsibilities:

The region server communicates with the client and handles data-related operations. It handles read and write requests for all the regions under it. It decides the size of each region by following the region size thresholds.
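The row key ranges and the size threshold described above can be sketched as follows. This is a minimal model under stated assumptions: the class `RegionDirectory`, the function `needs_split`, the start keys, and the threshold value are all hypothetical, and each region is taken to cover its start key up to (but not including) the next region's start key.

```python
from bisect import bisect_right


class RegionDirectory:
    """Maps a row key to the region (and server) whose key range contains it."""

    def __init__(self, start_keys, servers):
        # start_keys must be sorted; the first is "" so every key has a region.
        self.start_keys = start_keys
        self.servers = servers

    def locate(self, row_key):
        """Return (region_index, server) for the given row key."""
        i = bisect_right(self.start_keys, row_key) - 1
        return i, self.servers[i]


def needs_split(region_size_bytes, max_region_bytes):
    """A region is a split candidate once it grows past the threshold."""
    return region_size_bytes > max_region_bytes


directory = RegionDirectory(["", "g", "n", "t"], ["rs1", "rs2", "rs1", "rs3"])
print(directory.locate("apple"))  # (0, 'rs1')
print(directory.locate("g"))      # (1, 'rs2')
print(directory.locate("zebra"))  # (3, 'rs3')
print(needs_split(12 * 1024**3, 10 * 1024**3))  # True
```

Because the start keys are sorted, finding the right region for a row key is a binary search rather than a scan over every region.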

Use Cases:

Log analytics:

HBase comes with out-of-the-box integration with MapReduce. It is also optimized for sequential scans and reads, which makes it well suited for batch analysis of log data.
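A batch analysis of log data in the map and reduce style might look like the sketch below. It runs in plain Python rather than on a Hadoop cluster, and the log line format (`timestamp LEVEL message`), the sample lines, and the function names are assumptions for illustration.

```python
from collections import Counter
from itertools import chain


def map_log_line(line):
    """Map step: emit (level, 1) for a line shaped 'timestamp LEVEL message'."""
    parts = line.split(" ", 2)
    if len(parts) >= 2:
        yield parts[1], 1


def reduce_counts(pairs):
    """Reduce step: sum the counts emitted for each key."""
    totals = Counter()
    for key, count in pairs:
        totals[key] += count
    return dict(totals)


# Stand-in for rows returned by a sequential scan over a log table.
scanned_rows = [
    "2024-01-01T10:00:00 INFO service started",
    "2024-01-01T10:00:05 ERROR connection refused",
    "2024-01-01T10:00:09 INFO request served",
]

pairs = chain.from_iterable(map_log_line(line) for line in scanned_rows)
counts = reduce_counts(pairs)
print(counts)  # {'INFO': 2, 'ERROR': 1}
```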

Capturing metrics:

Many organizations have turned to HBase as an operational data store to capture various real-time metrics from their applications and servers. Its column-oriented data model makes HBase a natural fit for persisting these values.


Features:

Deep integration with Apache Hadoop: Because HBase is built on top of Hadoop, it supports parallelized processing via MapReduce. HBase can be used as both an input and an output for MapReduce jobs. Integration with Apache Hive allows users to query HBase tables using the Hive Query Language, which is similar to SQL.

Strong Consistency: HBase provides strongly consistent reads and writes. A single server in an HBase cluster is responsible for each subset of the data, and row operations are atomic, which ensures consistency.

Failure Detection: When a node fails, HBase automatically recovers the writes in progress and the edits that have not been flushed. It also reassigns the regions that the failed node was serving to other region servers.
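Recovery of unflushed edits relies on a write-ahead log that records each edit before it is applied in memory. The sketch below shows the general replay idea only; the tuple layout, the sequence numbers, and the function `replay_unflushed` are assumptions for illustration, not the HBase log format.

```python
def replay_unflushed(wal_entries, last_flushed_seq):
    """Rebuild in-memory edits from log entries newer than the last flush.

    wal_entries: iterable of (sequence_id, row_key, value) tuples.
    """
    memstore = {}
    for seq, row_key, value in sorted(wal_entries):
        if seq > last_flushed_seq:
            # Later edits to the same row overwrite earlier ones.
            memstore[row_key] = value
    return memstore


# Hypothetical log: the edit with sequence id 1 was already flushed to disk.
wal = [(1, "a", "v1"), (2, "b", "v2"), (3, "a", "v3")]
print(replay_unflushed(wal, last_flushed_seq=1))  # {'b': 'v2', 'a': 'v3'}
```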

Real time Queries: HBase uses configurable Bloom filters, block caches, and log-structured merge trees to store and query data efficiently. As a result, it provides random, real-time access to its data.
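To show why a Bloom filter speeds up random reads, here is a minimal, generic Bloom filter. It is not the HBase implementation: the class name, the bit-array size, the number of hashes, and the use of SHA-256 are all assumptions for the sketch. The filter can say "definitely not present", which lets a reader skip a file without opening it, or "possibly present".

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for simplicity

    def _positions(self, key):
        # Derive several bit positions by salting the key with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key):
        return all(self.bits[pos] for pos in self._positions(key))


bloom = BloomFilter()
bloom.add("row-000042")
print(bloom.might_contain("row-000042"))  # True
```

A `False` answer from `might_contain` is always reliable, so a lookup for a row key that a file cannot contain avoids the disk read entirely.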

Applications:

HBase is generally used whenever there is a need for write-heavy applications. It is also used whenever you need to provide fast random access to available data.

Recommended Audience:

Software developers

ETL developers

Project Managers

Team Leads

Business Analysts

Prerequisites:

There are no strict prerequisites for learning Big Data Hadoop. It is good to have some knowledge of OOP concepts, but it is not mandatory. Our trainers will teach you those OOP concepts if you are not familiar with them. Become a master in HBase with OnlineITGuru experts through the Big Data Hadoop online course.