Before learning what HDFS is, let’s look at the drawbacks of distributed file systems.

A file system is the set of methods and data structures that an operating system uses to keep track of files on a disk or partition.

Drawbacks of Distributed File System:

A distributed file system stores and processes data sequentially.

If one file is lost in the network, the entire file system can collapse.

Performance decreases as the number of users of the file system increases.

HDFS was introduced to overcome these problems.


Hadoop Distributed File System:

HDFS (Hadoop Distributed File System) is a distributed file system that provides high-performance access to data across Hadoop clusters. It stores huge amounts of data across multiple machines and provides easier access to that data for processing. It was designed to be highly fault tolerant: it enables rapid transfer of data between compute nodes and lets the Hadoop system keep running if a node fails.
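The fault-tolerance idea can be illustrated with a minimal Python sketch. It assumes a hypothetical placement map in which every block has three replicas on different nodes; the block IDs, node names, and the `readable_blocks` helper are made up for illustration and are not part of HDFS. It reports which blocks can still be read after some nodes fail.

```python
# Hypothetical placement: block ID -> set of nodes holding a replica of it.
# Names are illustrative only; each block has 3 replicas on distinct nodes.
placement = {
    "blk_1": {"node1", "node2", "node3"},
    "blk_2": {"node2", "node3", "node4"},
    "blk_3": {"node1", "node3", "node4"},
}


def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return {block for block, nodes in placement.items() if nodes - failed}


# Losing any single node leaves every block readable.
print(sorted(readable_blocks(placement, ["node3"])))
# ['blk_1', 'blk_2', 'blk_3']

# Losing every node that holds blk_1 makes that block unreadable.
print(sorted(readable_blocks(placement, ["node1", "node2", "node3"])))
# ['blk_2', 'blk_3']
```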


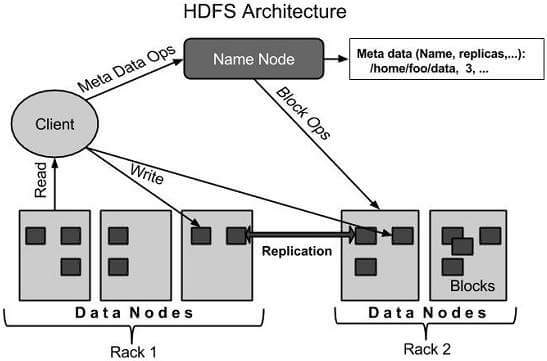

When HDFS loads data, it breaks the data into separate pieces and distributes them across different nodes in the cluster, which allows the data to be processed in parallel. A major advantage of this file system is that each piece of data is stored multiple times, on different nodes in the cluster. HDFS uses a master/slave architecture: each cluster consists of a single Name Node, which manages file system operations, and supporting Data Nodes, which manage data storage on the individual nodes.
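The splitting and replication described here can be sketched in a few lines of Python. This is a simplified model under stated assumptions: `split_into_blocks` and `place_replicas` are hypothetical helpers, the tiny block size is only for readability, and the round-robin placement is a stand-in, not the rack-aware policy real HDFS uses.

```python
def split_into_blocks(data: bytes, block_size: int) -> list:
    """Cut the data into fixed-size pieces; the last piece may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, nodes: list, replication: int) -> dict:
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplification for illustration only; it is not HDFS's placement policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    placement = {}
    for b in range(num_blocks):
        # Start each block on a different node so the load is spread out.
        placement[b] = [nodes[(b + r) % len(nodes)] for r in range(replication)]
    return placement


blocks = split_into_blocks(b"abcdefghij", block_size=4)
print(blocks)  # [b'abcd', b'efgh', b'ij']
print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"], replication=3))
# {0: ['dn1', 'dn2', 'dn3'], 1: ['dn2', 'dn3', 'dn4'], 2: ['dn3', 'dn4', 'dn1']}
```

Because consecutive blocks start on different nodes, different blocks can be read or processed by different machines at the same time.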

Architecture of HDFS:

Name Node: This is commodity hardware that runs the Name Node software on a GNU/Linux operating system. Any machine that supports Java can run the Name Node or Data Node software. The machine hosting the Name Node acts as the master server and performs the following tasks:

Executes file system operations such as opening, closing, and renaming files and directories.

Regulates client access to files.

Manages the file system namespace.
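To illustrate the namespace role, here is a minimal Python sketch of a toy Name Node that keeps only metadata. The class and method names are hypothetical and do not correspond to Hadoop's real API; closing files and client access control are left out.

```python
class ToyNameNode:
    """Hypothetical in-memory model of the Name Node's namespace.

    It maps file paths to block IDs only; the file contents themselves
    live on the Data Nodes.
    """

    def __init__(self):
        self.namespace = {}    # path -> list of block IDs
        self._next_block = 0   # counter used to generate block IDs

    def create(self, path, num_blocks):
        if path in self.namespace:
            raise FileExistsError(path)
        ids = [f"blk_{self._next_block + i}" for i in range(num_blocks)]
        self._next_block += num_blocks
        self.namespace[path] = ids
        return ids

    def open(self, path):
        """Return the block IDs a client needs to fetch to read the file."""
        if path not in self.namespace:
            raise FileNotFoundError(path)
        return self.namespace[path]

    def rename(self, src, dst):
        # A rename only updates metadata; no block data moves.
        if src not in self.namespace:
            raise FileNotFoundError(src)
        if dst in self.namespace:
            raise FileExistsError(dst)
        self.namespace[dst] = self.namespace.pop(src)


nn = ToyNameNode()
nn.create("/logs/a.txt", num_blocks=2)
nn.rename("/logs/a.txt", "/logs/b.txt")
print(nn.open("/logs/b.txt"))  # ['blk_0', 'blk_1']
```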

Data Node: This is also commodity hardware, with the Data Node software installed on a GNU/Linux operating system. Every node in the cluster runs a Data Node, which is responsible for managing the storage attached to that node.

It performs read and write operations on the file system as requested by clients.

It also performs block creation, deletion, and replication according to instructions from the Name Node.
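A Data Node's duties can be sketched as a small Python class. Everything here is hypothetical: `ToyDataNode`, its methods, and the `"delete"`/`"replicate"` command names are invented for illustration and are not the real Data Node protocol.

```python
from typing import Optional


class ToyDataNode:
    """Hypothetical Data Node that keeps block bytes in a dict keyed by block ID."""

    def __init__(self, name: str):
        self.name = name
        self.blocks: dict = {}

    def write_block(self, block_id: str, data: bytes) -> None:
        """Block creation: store the bytes a client sends for this block."""
        self.blocks[block_id] = data

    def read_block(self, block_id: str) -> bytes:
        return self.blocks[block_id]

    def handle(self, command: str, block_id: str,
               target: Optional["ToyDataNode"] = None) -> None:
        """Carry out an instruction from the Name Node (command names are made up)."""
        if command == "delete":
            self.blocks.pop(block_id, None)
        elif command == "replicate":
            if target is None:
                raise ValueError("replicate needs a target Data Node")
            # Copy the local block to another Data Node.
            target.write_block(block_id, self.blocks[block_id])
        else:
            raise ValueError(f"unknown command: {command}")


dn1, dn2 = ToyDataNode("dn1"), ToyDataNode("dn2")
dn1.write_block("blk_0", b"hello")            # block created by a client write
dn1.handle("replicate", "blk_0", target=dn2)  # Name Node: copy blk_0 to dn2
dn1.handle("delete", "blk_0")                 # Name Node: remove blk_0 from dn1
print(dn2.read_block("blk_0"), "blk_0" in dn1.blocks)  # b'hello' False
```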

Block:

Data is stored in HDFS in the form of files. Each file stored in HDFS is divided into one or more segments, which are stored on individual Data Nodes. These file segments are known as blocks. The default block size is 64 MB in Hadoop 1.x and 128 MB in Hadoop 2.x and later, and it can be changed in the HDFS configuration. A file smaller than a single block does not occupy a full block's worth of underlying storage.
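The arithmetic of splitting a file into blocks can be sketched in Python. `block_sizes` is a hypothetical helper, and the 200 MB file is an arbitrary example used to compare a 64 MB block size with a 128 MB one.

```python
MB = 1024 * 1024


def block_sizes(file_size: int, block_size: int) -> list:
    """Sizes of the blocks a file of `file_size` bytes is split into."""
    if file_size == 0:
        return []
    n = (file_size + block_size - 1) // block_size  # ceiling division
    # Every block is full except possibly the last one.
    last = file_size - (n - 1) * block_size
    return [block_size] * (n - 1) + [last]


print([s // MB for s in block_sizes(200 * MB, 64 * MB)])   # [64, 64, 64, 8]
print([s // MB for s in block_sizes(200 * MB, 128 * MB)])  # [128, 72]
```

In both cases the last block holds only the remaining data (8 MB or 72 MB) rather than a full block.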

Replication: The number of copies HDFS keeps of each block. By default, HDFS stores 3 replicas of every block, so the default replication factor is 3.
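A quick Python sketch of what the replication factor costs and buys, assuming every replica of a block sits on a different node; both helpers are hypothetical. With a factor of 3, 10 GB of data occupies 30 GB of raw disk, and a block survives the loss of up to two of the nodes holding it.

```python
GB = 1024 ** 3


def raw_storage(logical_bytes: int, replication: int = 3) -> int:
    """Disk space used across the cluster when each block is stored `replication` times."""
    return logical_bytes * replication


def tolerated_node_failures(replication: int = 3) -> int:
    """Node failures a block survives, assuming its replicas sit on distinct nodes."""
    return replication - 1


print(raw_storage(10 * GB) // GB)  # 30
print(tolerated_node_failures())   # 2
```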

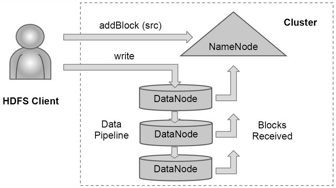

HDFS New File Creation: User applications access the HDFS file system through the HDFS client, which exports the HDFS file system interface.

When an application reads a file, the HDFS client asks the Name Node for the list of Data Nodes that host replicas of the file's blocks. This list is sorted by network topology distance from the client. The client then contacts a Data Node directly and requests the transfer of the desired block. When a client writes data to a file, it first asks the Name Node to choose Data Nodes to host replicas of the file's first block. When the first block is full, the client requests that new Data Nodes be chosen to host replicas of the next block.
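The step of sorting Data Nodes by network topology can be sketched in Python. This assumes a simplified tree model of the network in which each node is named by a path such as `/datacenter/rack/node`, and distance counts the hops from each node up to their closest common ancestor; `distance` and `sort_by_proximity` are hypothetical helpers, not Hadoop APIs.

```python
def distance(a: str, b: str) -> int:
    """Hops between two nodes given as topology paths like '/dc1/rack1/node1'.

    Counts steps from each node up to their closest common ancestor:
    same node 0, same rack 2, same data center 4, otherwise 6.
    """
    pa, pb = a.strip("/").split("/"), b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


def sort_by_proximity(client: str, replicas: list) -> list:
    """Order replica locations nearest-first relative to the client."""
    return sorted(replicas, key=lambda node: distance(client, node))


client = "/dc1/rack1/node1"
replicas = ["/dc2/rack9/node7", "/dc1/rack2/node4", "/dc1/rack1/node3"]
print(sort_by_proximity(client, replicas))
# ['/dc1/rack1/node3', '/dc1/rack2/node4', '/dc2/rack9/node7']
```

The client would then try the first entry, a node on its own rack, before falling back to more distant replicas.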

The default replication factor is 3, and it can be changed as required (the cluster-wide default is set by the dfs.replication property).
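The block-by-block write path and a configurable replication factor can be combined in a hedged Python sketch: for each block, the client receives a new list of `replication` Data Nodes. `write_file` is a hypothetical helper, and the random choice is a simplification, not HDFS's actual rack-aware placement policy.

```python
import random
from typing import List, Optional


def write_file(num_blocks: int, datanodes: List[str], replication: int = 3,
               seed: Optional[int] = None) -> List[List[str]]:
    """Pick a fresh set of `replication` Data Nodes for each block of a file.

    random.sample stands in for the Name Node's choice of targets; the real
    placement policy is rack-aware and is not modelled here.
    """
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds number of Data Nodes")
    rng = random.Random(seed)
    targets_per_block = []
    for _ in range(num_blocks):
        # When the previous block is full, ask for targets for the next block.
        targets_per_block.append(rng.sample(datanodes, replication))
    return targets_per_block


nodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]
for block, targets in enumerate(write_file(3, nodes, replication=2, seed=7)):
    print(f"block {block} -> {targets}")
```

Changing `replication` only changes how many Data Nodes each block is sent to; the block-by-block structure of the write stays the same.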

Features of HDFS:

Streaming access to file system data.

Suitable for distributed storage and processing.

Provides a command interface for interacting with HDFS.

Built-in servers on the Name Node and Data Nodes help end users easily check the status of the cluster.

Recommended Audience:

Team Leads

ETL developers

Software developers

Project Managers

Prerequisites:

No prior knowledge of any particular technology is required to start learning Big Data Hadoop, although some basic knowledge of Java concepts is needed.

It is good to have knowledge of OOP concepts and Linux commands.