Transferring gigabytes or terabytes of data into a Hadoop cluster is a challenging task. During the transfer we need to consider factors such as data consistency, and there is a risk of losing data along the way. We therefore need a tool built for moving data in bulk. Apache Sqoop is a solution to this problem.

Sqoop:

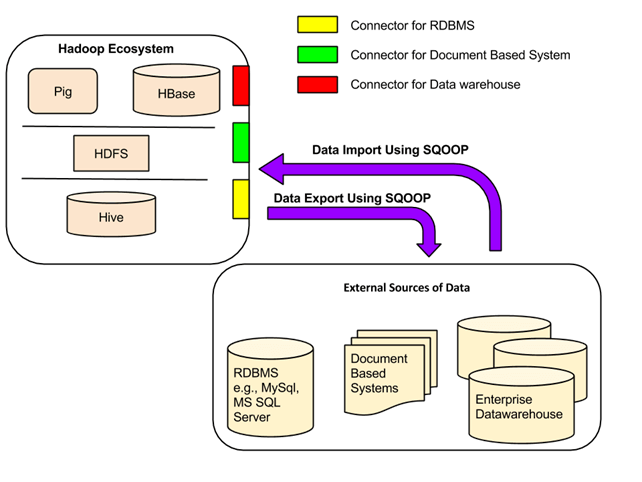

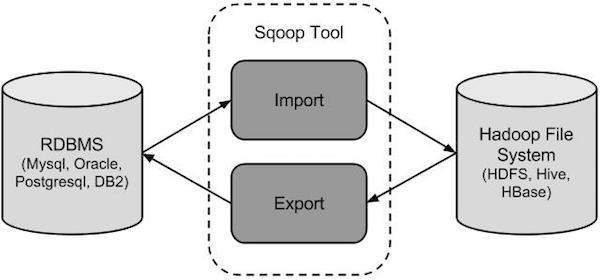

Apache Sqoop is a tool designed for transferring bulk data between Apache Hadoop and structured data stores such as relational databases. It acts as an intermediate layer between relational databases and Hadoop, including the Hadoop Distributed File System (HDFS) and other parts of the Hadoop ecosystem such as Hive and HBase. Sqoop can extract data from relational databases such as Teradata, Oracle, and MySQL. It uses MapReduce to fetch data from the RDBMS and store it in HDFS. By default it uses four mappers, which can be changed as required. Internally, Sqoop uses the JDBC interface, so it can work with any JDBC-compatible database.

Sqoop automates most of the process and relies on the database to describe the schema of the data to be imported. It makes life easier for developers by providing a command-line interface: developers supply parameters such as the source, the destination, and the database authentication details in the sqoop command, and Sqoop takes care of the rest.
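As a rough illustration of those parameters, here is a minimal Python sketch that assembles a `sqoop import` command line. The host, database, user, table, and paths are hypothetical, and the helper `build_import_command` is only an illustration; it prints the command rather than running Sqoop. The flags themselves (`--connect`, `--username`, `--password-file`, `--table`, `--target-dir`, `--num-mappers`) are standard Sqoop import arguments.

```python
import shlex


def build_import_command(jdbc_url, username, password_file, table,
                         target_dir, num_mappers=4):
    """Assemble a `sqoop import` command line as a list of arguments."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,               # source: JDBC URL of the database
        "--username", username,              # database authentication
        "--password-file", password_file,    # file that holds the password
        "--table", table,                    # source table to import
        "--target-dir", target_dir,          # destination directory in HDFS
        "--num-mappers", str(num_mappers),   # parallel map tasks (default is 4)
    ]


# All values below are hypothetical placeholders.
cmd = build_import_command(
    jdbc_url="jdbc:mysql://db.example.com/sales",
    username="etl_user",
    password_file="/user/etl/.db-password",
    table="orders",
    target_dir="/data/raw/orders",
)
print(shlex.join(cmd))
```

Keeping the command as a list of arguments avoids quoting problems if it is later passed to something like `subprocess.run`.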

Workflow:

Sqoop can import data from databases into Hadoop and export data from Hadoop back to databases.

Data Export:

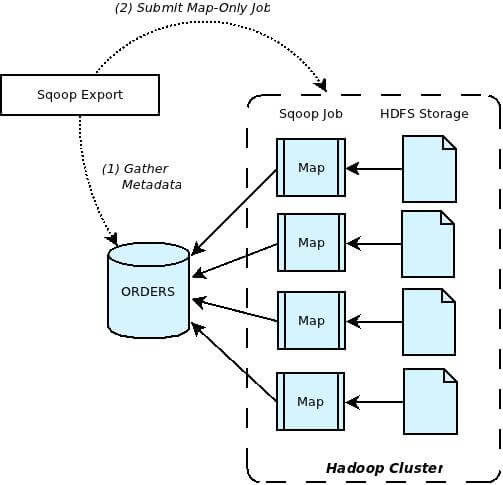

Data export from Hadoop is done in two steps:

The first step is to introspect the database for metadata; the second is to transfer the data. Sqoop divides the input dataset into splits and then uses individual map tasks to push the splits into the database. Each map task transfers only its own split, which keeps resource utilization low and throughput high.
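An export is started in much the same way as an import. The sketch below, in Python, builds a `sqoop export` command; the connection details, table name, and HDFS path are hypothetical, and `build_export_command` is an illustrative helper, not part of Sqoop. Note that the target table is expected to exist in the database already.

```python
import shlex


def build_export_command(jdbc_url, username, password_file, table,
                         export_dir, num_mappers=4):
    """Assemble a `sqoop export` command line as a list of arguments."""
    return [
        "sqoop", "export",
        "--connect", jdbc_url,               # destination database (JDBC URL)
        "--username", username,
        "--password-file", password_file,
        "--table", table,                    # existing table to populate
        "--export-dir", export_dir,          # HDFS directory holding the input
        "--num-mappers", str(num_mappers),   # map tasks pushing splits in parallel
    ]


# Hypothetical values, for illustration only.
export_cmd = build_export_command(
    jdbc_url="jdbc:mysql://db.example.com/reporting",
    username="etl_user",
    password_file="/user/etl/.db-password",
    table="daily_totals",
    export_dir="/data/out/daily_totals",
)
print(shlex.join(export_cmd))
```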

Data Import:

Sqoop parses the arguments provided on the command line and prepares a map-only job. The job launches as many mappers as the command line specifies. For an import, each mapper is assigned a part of the data to be imported. Sqoop distributes the input data equally among the mappers to get high performance. Each mapper opens a connection to the database using JDBC, fetches the part of the data assigned to it, and writes it to HDFS, Hive, or HBase, depending on the options provided on the command line.
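To make the idea of dividing the data among mappers concrete, here is a simplified Python sketch. It assumes the table is split on a numeric key column whose minimum and maximum values are known, and it cuts that range into near-equal chunks, one per mapper. This is an illustration of the principle, not Sqoop's actual splitter code; the table `orders` and column `id` are hypothetical.

```python
def split_ranges(min_id, max_id, num_mappers=4):
    """Divide the inclusive key range [min_id, max_id] into contiguous,
    near-equal chunks, one per mapper."""
    if max_id < min_id:
        return []
    total = max_id - min_id + 1
    num_mappers = min(num_mappers, total)     # never more mappers than keys
    base, extra = divmod(total, num_mappers)
    ranges = []
    start = min_id
    for i in range(num_mappers):
        size = base + (1 if i < extra else 0)  # spread the remainder evenly
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


# Each mapper would then fetch only its own slice of the table.
for lo, hi in split_ranges(1, 1000, num_mappers=4):
    print(f"SELECT * FROM orders WHERE id >= {lo} AND id <= {hi}")
```

An even split of the key range only gives an even split of the work when the key values are spread fairly uniformly; a heavily skewed key can leave some mappers with far more rows than others.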

Working:

Sqoop is an effective tool for programmers. It works by looking at the database to be imported and selecting a relevant import function for the source data. Once the input is recognized, the metadata for the table is read and a class definition is created for the input requirements. Sqoop can be made to work selectively, fetching only the columns needed instead of importing the entire input and then searching through it, which saves a considerable amount of time. In practice, the import from the database to HDFS is carried out by a MapReduce job that Apache Sqoop creates in the background.
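A selective import of this kind can be sketched as follows, again in Python and only building the command. The `--columns` and `--where` arguments are standard Sqoop import options, and `-P` asks for the password on the console; the table, column names, filter, and the helper `selective_import_args` are hypothetical.

```python
import shlex


def selective_import_args(table, columns, where=None):
    """Build the arguments that restrict an import to chosen columns and rows."""
    args = ["--table", table, "--columns", ",".join(columns)]
    if where:
        args += ["--where", where]   # optional row filter applied at the source
    return args


# Hypothetical connection details and schema.
selective_cmd = [
    "sqoop", "import",
    "--connect", "jdbc:mysql://db.example.com/sales",
    "--username", "etl_user",
    "-P",
] + selective_import_args(
    table="orders",
    columns=["id", "customer_id", "total"],
    where="order_date >= '2020-01-01'",
)
print(shlex.join(selective_cmd))
```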

Sqoop Connectors:

Existing databases are designed with the SQL standard in mind. However, each DBMS differs to some extent in its SQL dialect, and this difference poses challenges when transferring data between systems. Sqoop addresses this with connectors: data transfer between Sqoop and an external storage system is made possible by Sqoop connectors.

Sqoop has connectors for working with a range of popular relational databases such as MySQL, Oracle, DB2, and SQL Server. It also contains a generic JDBC connector for connecting to any database that supports the JDBC protocol. In addition, it provides optimized MySQL and PostgreSQL connectors that use database-specific APIs to perform bulk transfers efficiently.
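From the command line, the choice of database shows up mainly in the JDBC connection string. The Python sketch below maps a few databases to their usual JDBC URL formats and builds the matching connection arguments. The hosts, ports, database names, and the helper `connection_args` are hypothetical; `--driver` (manually specify the JDBC driver class) and `--direct` (use the database's direct connector where one exists) are standard Sqoop options.

```python
# Usual JDBC URL formats for some common databases.
JDBC_URL_TEMPLATES = {
    "mysql": "jdbc:mysql://{host}:{port}/{database}",
    "postgresql": "jdbc:postgresql://{host}:{port}/{database}",
    "oracle": "jdbc:oracle:thin:@//{host}:{port}/{database}",
    "sqlserver": "jdbc:sqlserver://{host}:{port};databaseName={database}",
    "db2": "jdbc:db2://{host}:{port}/{database}",
}


def connection_args(db_type, host, port, database,
                    driver_class=None, direct=False):
    """Build the Sqoop arguments that select and configure the connection."""
    url = JDBC_URL_TEMPLATES[db_type].format(
        host=host, port=port, database=database)
    args = ["--connect", url]
    if driver_class:
        args += ["--driver", driver_class]   # explicit JDBC driver class
    if direct:
        args.append("--direct")              # database-specific bulk path
    return args


# Hypothetical servers, for illustration only.
print(connection_args("mysql", "db.example.com", 3306, "sales", direct=True))
print(connection_args("postgresql", "pg.example.com", 5432, "analytics",
                      driver_class="org.postgresql.Driver"))
```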

Recommended Audience:

Software developers

ETL developers

Project Managers

Team Leads

Business Analysts

Prerequisites: There are no strict prerequisites for learning Big Data Hadoop. Some knowledge of OOP concepts is helpful, but it is not mandatory.