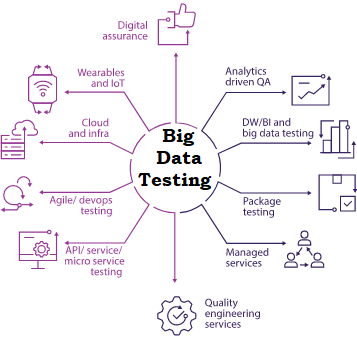

Big Data refers to the creation, storage, retrieval, and analysis of data that is remarkable in its volume and velocity. Testing such data sets involves a variety of tools, frameworks, and techniques. In this blog, we will learn the techniques and terminology behind Big Data testing.

1. Keys of Big Data Testing

2. How to Test Big Data Applications

3. Big Data Testing

4. Challenges in Big Data Testing

Now let us look at each of these topics in detail:

1. Keys of Big Data Testing:

Testing Big Data applications is more about verifying how the data is processed than about checking the individual features of the software. When it comes to this kind of testing, performance testing and functional testing are the keys.

2. How to Test Big Data Applications:

a) Data Staging Validation:

1. The first step, also referred to as the pre-Hadoop stage, involves process validation.

2. Data from different sources such as RDBMS, weblogs, and social media is validated to ensure that the right data is pulled into the system.

3. The source data is compared with the data pushed into the Hadoop system to make sure they match.

4. Verify that the right data is extracted and loaded into the correct HDFS location.

5. Tools such as Talend and Datameer can be used for data staging validation.
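To make the comparison in step 3 concrete, here is a minimal sketch in Python. It assumes the source extract and the staged data have already been read into lists of dictionaries; the function names and the sample records are hypothetical and only for illustration. Real projects would typically read from the RDBMS and from HDFS instead.

```python
import hashlib

def record_fingerprint(record):
    """Return a stable hash of one record (a dict), independent of key order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def compare_source_and_staged(source_records, staged_records):
    """Compare record counts and content hashes between source and staged data."""
    source_hashes = {record_fingerprint(r) for r in source_records}
    staged_hashes = {record_fingerprint(r) for r in staged_records}
    return {
        "source_count": len(source_records),
        "staged_count": len(staged_records),
        "missing_in_staged": len(source_hashes - staged_hashes),
        "unexpected_in_staged": len(staged_hashes - source_hashes),
    }

# Hypothetical sample data standing in for an RDBMS extract and a staged HDFS file.
source = [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]
staged = [{"name": "alice", "id": 1}, {"id": 2, "name": "bob"}]

report = compare_source_and_staged(source, staged)
print(report)
```

A report with equal counts and zero missing or unexpected records suggests that the staged data matches the source. Hashing each record keeps the comparison cheap even when the records are wide.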

b) MapReduce Validation:

The second step is the validation of MapReduce. In this stage, the tester verifies the business logic on a single node and then validates it again by running against multiple nodes, to ensure that the MapReduce process works correctly.

Data aggregation rules are implemented on the data.

Key-value pairs are generated.

The data is validated after the MapReduce process completes.
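The single-node check described above can be sketched with a small in-memory job in Python. This is only an illustration and not Hadoop code: the input format (`region,amount` lines), the aggregation rule (total amount per region), and all function names are assumptions made for the example.

```python
from collections import defaultdict

def map_phase(line):
    """Emit (region, amount) key-value pairs from one 'region,amount' line."""
    region, amount = line.split(",")
    yield region.strip(), int(amount)

def reduce_phase(key, values):
    """Aggregation rule under test: total amount per region."""
    return key, sum(values)

def run_local_job(lines):
    """Run map, shuffle, and reduce in memory, as a single-node check."""
    grouped = defaultdict(list)
    for line in lines:
        for key, value in map_phase(line):
            grouped[key].append(value)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

# Hypothetical input with a known expected result.
sample_input = ["north, 10", "south, 5", "north, 7"]
result = run_local_job(sample_input)
print(result)  # {'north': 17, 'south': 5}
```

Once the logic passes on a small, known input like this, the same expected totals can be compared against the output of the job running on multiple nodes.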

c) Output Validation Phase:

The third and final stage is the output validation process. The output data files are generated and ready to be moved to an EDW (Enterprise Data Warehouse) or any other system, depending on the requirement.

Activities in the third stage include:

Checking that the transformation rules are applied correctly.

Checking data integrity and the successful data load into the target system.

Checking that there is no data corruption by comparing the target data with the HDFS data.
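The checks in this stage can be sketched as follows in Python. The transformation rule (upper-case the country code and convert cents to whole currency units), the field names, and the sample rows are all hypothetical; they stand in for whatever rules your project defines.

```python
def apply_transformation(row):
    """Hypothetical rule: upper-case the country code, convert cents to units."""
    return {
        "id": row["id"],
        "country": row["country"].upper(),
        "amount": row["amount_cents"] / 100,
    }

def validate_output(hdfs_rows, warehouse_rows):
    """Report rows that are missing from the target or differ from the expected result."""
    expected = {row["id"]: apply_transformation(row) for row in hdfs_rows}
    loaded = {row["id"]: row for row in warehouse_rows}
    missing = sorted(set(expected) - set(loaded))
    mismatched = sorted(
        row_id for row_id in expected
        if row_id in loaded and expected[row_id] != loaded[row_id]
    )
    return {"missing": missing, "mismatched": mismatched}

# Hypothetical rows: the second warehouse row did not get its country code upper-cased.
hdfs_rows = [
    {"id": 1, "country": "in", "amount_cents": 1250},
    {"id": 2, "country": "us", "amount_cents": 300},
]
warehouse_rows = [
    {"id": 1, "country": "IN", "amount": 12.5},
    {"id": 2, "country": "us", "amount": 3.0},
]

result = validate_output(hdfs_rows, warehouse_rows)
print(result)  # {'missing': [], 'mismatched': [2]}
```

An empty `missing` list indicates a complete load, while any entry in `mismatched` points to a transformation rule that was not applied or to corrupted data.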

3. Big Data Testing:

a) Architecture Testing:

Hadoop processes very large volumes of data. Architecture testing is therefore crucial to the success of your Big Data project. A poorly designed system may lead to performance degradation, and the system could fail to meet the requirements.

At a minimum, performance and failover tests should be carried out in a Hadoop environment.

b) Performance Testing:

Performance testing for Big Data includes two main activities: data ingestion and data processing.

c) Data Ingestion:

In this stage, the tester verifies how fast the system can consume data from the various data sources. Testing involves measuring how many messages the system can process in a given time.

It also includes how quickly data can be inserted into the underlying data store, for example the insertion rate into a MongoDB or Cassandra database.
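As a rough illustration of measuring an insertion rate, here is a small Python sketch. The `InMemoryStore` class is a hypothetical stand-in for a real database client, so the number it prints says nothing about MongoDB or Cassandra; in a real test you would replace it with your actual client.

```python
import time

class InMemoryStore:
    """Hypothetical stand-in for a real data store client."""

    def __init__(self):
        self.rows = []

    def insert(self, row):
        self.rows.append(row)

def measure_ingestion_rate(store, records):
    """Insert all records and return the observed rate in records per second."""
    start = time.perf_counter()
    for record in records:
        store.insert(record)
    # Guard against a zero interval on very fast runs.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return len(records) / elapsed

store = InMemoryStore()
records = [{"id": i} for i in range(10_000)]
rate = measure_ingestion_rate(store, records)
print(f"{rate:,.0f} records/second")
```

Running the same measurement with different batch sizes and from different sources gives a simple picture of how the ingestion rate changes under load.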

d) Data Processing:

It involves verifying the speed at which queries or MapReduce jobs are executed. It also includes testing the data processing in isolation when the underlying data store is populated with the data sets.

For instance, running MapReduce jobs on the underlying HDFS.

e) Sub-Component Performance:

These systems are made up of multiple components, and it is essential to test each of these components in isolation. For example, how quickly messages are indexed and consumed, MapReduce jobs, query performance, search, and so on.
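Timing each component in isolation can be sketched like this in Python. The two workloads below are hypothetical placeholders; in practice each entry would call the real component, such as a message indexer or a query.

```python
import time

def time_components(components):
    """Run each component on its own and record how long it takes, in seconds."""
    timings = {}
    for name, func in components.items():
        start = time.perf_counter()
        func()
        timings[name] = time.perf_counter() - start
    return timings

# Hypothetical workloads standing in for real components.
components = {
    "message_indexing": lambda: sorted(range(50_000), reverse=True),
    "query_execution": lambda: sum(i * i for i in range(50_000)),
}

timings = time_components(components)
slowest = max(timings, key=timings.get)
for name, seconds in timings.items():
    print(f"{name}: {seconds:.4f} s")
print(f"slowest component: {slowest}")
```

Measuring the components separately makes it easier to see which one is the bottleneck before testing the whole pipeline together.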

4. Challenges in Big Data Testing:

a) Automation:

Automation testing for Big Data requires someone with technical expertise. Also, automated tools are not equipped to handle unexpected problems that arise during testing.

b) Virtualization:

It is one of the key phases of testing. Virtual machines introduce timing issues in real-time data testing. Managing images in Big Data is also a major problem.

c) Large Data Sets:

We need to verify more data and need to do it faster.

We need to automate the testing effort.

We need to be able to test across different platforms.

To conclude, these are the best-known and most widely implemented concepts of Big Data testing. In upcoming blogs, we will share more on this testing process.