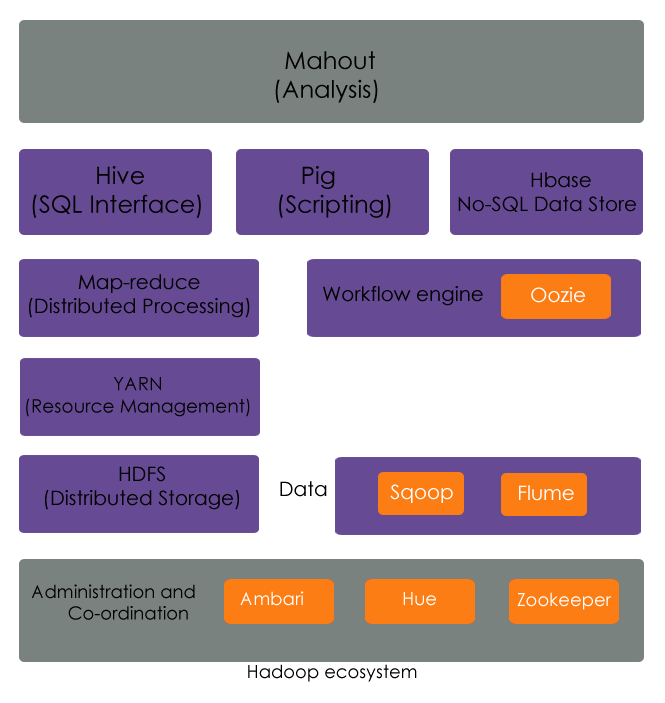

The Hadoop ecosystem is a family of related projects that work alongside HDFS and MapReduce for the distributed storage and processing of big data.

The majority of these software projects are hosted by the Apache Software Foundation.

The figure above is a diagrammatic representation of the Hadoop ecosystem.

Let’s have a brief overview of the Hadoop ecosystem components.

A) Data transfer components

Hadoop deals with structured, semi-structured and unstructured data. Two ecosystem components handle the transfer of data into and out of Hadoop: Sqoop and Flume.

Sqoop:

Sqoop is a tool for efficiently moving large amounts of structured data between HDFS and relational databases such as Oracle, SQL Server and MySQL.

Sqoop provides import and export utilities to move data into and out of Hadoop. The name is a contraction: "Sq" comes from SQL and "oop" comes from Hadoop.
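To make the import and export utilities concrete, here is a minimal sketch that only builds the two command lines as argument lists and does not run them. The JDBC URL, user name, table name and HDFS paths are hypothetical placeholders; the flags shown (`--connect`, `--username`, `--table`, `--target-dir`, `--export-dir`, `--num-mappers`) are standard Sqoop 1 options, but check them against the version you have installed.

```python
def sqoop_import_cmd(jdbc_url, username, table, target_dir, mappers=4):
    """Build a `sqoop import` command: copy one database table into HDFS."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,            # JDBC URL of the source database
        "--username", username,
        "--table", table,                 # table to read from
        "--target-dir", target_dir,       # HDFS directory to write into
        "--num-mappers", str(mappers),    # number of parallel map tasks
    ]


def sqoop_export_cmd(jdbc_url, username, table, export_dir):
    """Build a `sqoop export` command: copy HDFS files back into a table."""
    return [
        "sqoop", "export",
        "--connect", jdbc_url,
        "--username", username,
        "--table", table,                 # table to write into
        "--export-dir", export_dir,       # HDFS directory to read from
    ]


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    url = "jdbc:mysql://db.example.com/shop"
    print(" ".join(sqoop_import_cmd(url, "etl", "orders", "/data/orders")))
    print(" ".join(sqoop_export_cmd(url, "etl", "order_totals", "/data/order_totals")))
```

Passing either list to `subprocess.run` on a machine with Sqoop installed would start the transfer; building the list first keeps the sketch safe to run anywhere.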

Flume:

Sqoop deals with structured data, but Hadoop is also known for handling semi-structured and unstructured data.

Typically, when we visit a website, all the clicks and actions on the site are recorded and written to a log file.

Flume can read that data from the log file and deliver it to Hadoop in a compatible format.

Flume is a distributed service for collecting, aggregating and moving large amounts of log data. It is also called a log collector.
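The collect, aggregate and move steps can be pictured with a small in-memory sketch. It follows the source, channel and sink roles that a Flume agent is built from, but it is a conceptual model only and not Flume's API; the function names and the batch size are assumptions made for illustration.

```python
from collections import deque


def source(lines):
    """Collect: turn raw log lines into events, skipping blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield {"body": line}


def run_agent(lines, batch_size=2):
    """Aggregate events in a channel and move them to the sink in batches."""
    channel = deque()   # buffer between the source and the sink
    batches = []        # stands in for files written to HDFS by the sink
    for event in source(lines):
        channel.append(event)
        if len(channel) >= batch_size:
            batches.append([channel.popleft()["body"] for _ in range(batch_size)])
    if channel:
        # Flush whatever is left when the input ends.
        batches.append([event["body"] for event in channel])
    return batches


log_lines = ["GET /home", "GET /cart", "", "POST /checkout"]
print(run_agent(log_lines))
# → [['GET /home', 'GET /cart'], ['POST /checkout']]
```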

B) API (Application programming) components

MapReduce programs are typically written in Java and can be complicated. Hive and Pig are two components that generate MapReduce jobs in a more user-friendly way.

Hive:

SQL is simple, and business users, end users and data analysts already understand it. Hive acts like an API: you write queries in an SQL dialect known as HiveQL, and Hive turns them into MapReduce jobs.

Hive is a distributed data warehouse infrastructure built on top of Hadoop that provides big data analysis, summarization and querying using HiveQL.
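To see why a query can stand in for a hand-written job, the sketch below spells out the map, shuffle and reduce steps that a simple `GROUP BY` count corresponds to. It is plain Python on an in-memory list, not what Hive actually executes, and the `clicks` table and its columns are hypothetical.

```python
from collections import defaultdict

# The HiveQL an analyst would write (hypothetical table and column names).
HIVEQL = "SELECT page, COUNT(*) FROM clicks GROUP BY page"


def map_phase(rows):
    """Map: emit (key, 1) for every row, keyed by the GROUP BY column."""
    for row in rows:
        yield row["page"], 1


def shuffle(pairs):
    """Shuffle: bring all values for the same key together."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: COUNT(*) is the sum of the 1s emitted for each key."""
    return {key: sum(values) for key, values in groups.items()}


clicks = [
    {"user": "u1", "page": "/home"},
    {"user": "u2", "page": "/cart"},
    {"user": "u1", "page": "/home"},
]
result = reduce_phase(shuffle(map_phase(clicks)))
print(result)
# → {'/home': 2, '/cart': 1}
```

The one-line query replaces all three functions, which is the convenience Hive offers to people who know SQL but not Java.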

Pig:

To make programming easier, a high-level programming language known as Pig Latin was created. Pig Latin uses English-like commands for operations such as filtering and sorting (for example, FILTER and ORDER BY).

Pig is used to analyze very large datasets stored in HDFS.

Pig has a language layer, which uses the query language Pig Latin, and an infrastructure layer, consisting of a compiler that produces a sequence of MapReduce programs from Pig Latin commands.
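As an illustration of the filter and sort commands, the sketch below shows a short Pig Latin script in comments and a plain Python equivalent of the same data flow. The relation name, field names and threshold are hypothetical, and the Python runs on an in-memory list rather than on a cluster.

```python
# Pig Latin version (hypothetical input path and schema):
#   logs   = LOAD 'logs' AS (user:chararray, bytes:int);
#   big    = FILTER logs BY bytes > 100;
#   sorted = ORDER big BY bytes DESC;


def pig_like_pipeline(records, min_bytes):
    """Keep records above a threshold, then sort them largest first."""
    big = [r for r in records if r[1] > min_bytes]          # FILTER ... BY
    return sorted(big, key=lambda r: r[1], reverse=True)    # ORDER ... BY ... DESC


logs = [("asha", 50), ("ravi", 300), ("meena", 150)]
print(pig_like_pipeline(logs, 100))
# → [('ravi', 300), ('meena', 150)]
```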

C) Data storage components

HBase:

HBase is an open-source, non-relational distributed database. It runs on top of HDFS.

It is representative of the NoSQL databases available for Hadoop. It is a column-oriented database, where data is organized into columns rather than rows.

In short, HBase is a distributed column-oriented database that uses HDFS for its data storage.

In HBase you can store data in extremely large tables whose column structure can vary from row to row.
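The idea of a variable column structure can be sketched with nested dictionaries: each row key maps to only the columns that row actually has. This is a toy model and not the HBase client API; the `family:qualifier` column naming follows HBase convention, while the class name, row keys and values are made up for illustration.

```python
class SparseTable:
    """Toy model of a wide table: row key -> {"family:qualifier": value}."""

    def __init__(self):
        self.rows = {}

    def put(self, row_key, column, value):
        # Only cells that are written take up space.
        self.rows.setdefault(row_key, {})[column] = value

    def get(self, row_key, column):
        # A missing cell returns None; nothing is stored for it.
        return self.rows.get(row_key, {}).get(column)

    def columns(self, row_key):
        # Columns present for this particular row, in sorted order.
        return sorted(self.rows.get(row_key, {}))


users = SparseTable()
users.put("user#1", "info:name", "Asha")
users.put("user#1", "info:email", "asha@example.com")
users.put("user#2", "info:name", "Ravi")
users.put("user#2", "stats:logins", 17)

print(users.columns("user#1"))  # → ['info:email', 'info:name']
print(users.columns("user#2"))  # → ['info:name', 'stats:logins']
```

The two rows share one table but carry different columns, which a fixed relational schema would only allow by padding rows with NULLs.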

D) Analysis components

Mahout:

Mahout is the machine learning component of the open-source Hadoop world.

It is a library of machine learning and statistical algorithms.

Mahout helps us implement machine learning algorithms and apply them to big data analysis.

It is mainly used by data scientists for machine learning and statistical analysis.

Example: a recommendation engine of the kind Amazon uses to suggest products that match your taste, based on your past purchases.
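The recommendation example can be sketched with a very simple co-occurrence score: items bought by users whose baskets overlap with yours are ranked by how large that overlap is. This is an illustrative toy and not Mahout's API or any retailer's actual method; the user names, items and scoring rule are assumptions.

```python
from collections import Counter


def recommend(purchases, user, top_n=2):
    """Rank items the user has not bought by overlap with similar users."""
    owned = purchases[user]
    scores = Counter()
    for other, items in purchases.items():
        if other == user:
            continue
        overlap = len(owned & items)     # how similar the two baskets are
        if overlap == 0:
            continue                     # nothing in common, ignore this user
        for item in items - owned:
            scores[item] += overlap      # weight new items by similarity
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


purchases = {
    "alice": {"book", "lamp"},
    "bob": {"book", "lamp", "desk"},
    "carol": {"book", "pen"},
    "dave": {"sofa"},
}
print(recommend(purchases, "alice"))
# → ['desk', 'pen']
```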

E) Workflow management

Oozie:

Oozie is a Java-based application responsible for scheduling jobs in a Hadoop system. It is a workflow scheduler: it lets you specify that a given job should run at a given time.

In the Hadoop ecosystem you may write programs in Hive and Pig and want to run them one after the other on a schedule, which can be done with Oozie.
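Running a Hive step and then a Pig step one after the other can be modelled as a small state machine in which each action names where to go on success and where to go on failure, similar in spirit to the ok and error transitions of an Oozie workflow. The sketch below is plain Python, not Oozie's XML or API, it leaves out time-based triggering, and the action names are hypothetical.

```python
def run_workflow(actions, start):
    """Follow ok/error transitions until the workflow reaches 'end' or 'kill'.

    actions maps an action name to (callable, ok_to, error_to).
    """
    trace = []
    node = start
    while node not in ("end", "kill"):
        func, ok_to, error_to = actions[node]
        try:
            func()
            trace.append((node, "ok"))
            node = ok_to
        except Exception:
            trace.append((node, "error"))
            node = error_to
    return node, trace


def failing_step():
    raise RuntimeError("query failed")


# Hypothetical two-step workflow: a Hive step followed by a Pig step.
good = {
    "hive-step": (lambda: None, "pig-step", "kill"),
    "pig-step": (lambda: None, "end", "kill"),
}
bad = {
    "hive-step": (failing_step, "pig-step", "kill"),
    "pig-step": (lambda: None, "end", "kill"),
}

print(run_workflow(good, "hive-step"))
# → ('end', [('hive-step', 'ok'), ('pig-step', 'ok')])
print(run_workflow(bad, "hive-step"))
# → ('kill', [('hive-step', 'error')])
```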

F) Administration and Co-ordination components

Ambari:

Ambari is used by system administrators. Hadoop is known for its multi-node cluster topology, and Ambari provides administrative tools for installing, maintaining and monitoring Hadoop clusters.

For example, if you want to add or remove a slave node in a cluster, you can do it through Ambari.

Hue:

Hue is also an administrative interface. It provides a GUI for issuing Hive and Pig queries, browsing files and developing Oozie workflows.

ZooKeeper:

ZooKeeper is a mechanism for co-ordination and synchronization between the Hadoop ecosystem tools and components.

It provides centralized co-ordination services, such as naming, configuration and synchronization, that are used by the various distributed applications.
