Apache Oozie is an open-source Java web application for scheduling Apache Hadoop jobs in a distributed environment. It combines multiple complex jobs so that they run in sequence to accomplish a larger task. Within that sequence, two or more jobs can also be configured to run in parallel with each other.

Oozie is a workflow scheduler for Hadoop. It is integrated with the Hadoop stack, with YARN as its architectural center, and supports Apache MapReduce, Apache Pig, and Apache Sqoop jobs. It can also schedule system-specific jobs such as Java programs or shell scripts, and it combines multiple jobs sequentially into one logical unit of work. Oozie is responsible for triggering the workflow actions, which in turn use the Hadoop execution engine to actually execute the tasks. Users specify the dependencies between jobs as a directed acyclic graph (DAG), and Oozie executes the jobs in the correct order as specified in the workflow. Oozie is scalable and can manage the timely execution of thousands of workflows in a Hadoop cluster.
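To make the DAG idea concrete, here is a minimal Python sketch (not Oozie code) that orders a hypothetical four-job workflow. The job names and the `execution_stages` helper are invented for illustration; Oozie itself reads the dependencies from a workflow definition. Jobs that end up in the same stage do not depend on each other, which is what allows them to run in parallel.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical workflow: each job maps to the set of jobs it depends on.
DEPENDENCIES = {
    "sqoop-import": set(),
    "pig-clean": {"sqoop-import"},
    "hive-load": {"sqoop-import"},
    "mapreduce-aggregate": {"pig-clean", "hive-load"},
}

def execution_stages(deps):
    """Group jobs into stages; jobs in the same stage have no dependency on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every job whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)                 # mark them finished to unlock the next stage
    return stages

for i, stage in enumerate(execution_stages(DEPENDENCIES), start=1):
    print(f"stage {i}: {', '.join(stage)}")
```

Here `hive-load` and `pig-clean` share a stage because both depend only on `sqoop-import`. If the dependencies contained a cycle, `prepare()` would fail, mirroring the requirement that a workflow graph be acyclic.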

Architecture:

It consists of two parts:

Workflow engine: Stores and runs workflows composed of Hadoop jobs, for example Pig, Hive, and MapReduce jobs.

Coordinator engine: Runs workflow jobs based on predefined schedules and the availability of data.
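The coordinator's two triggers, time and data, can be modeled in a few lines. In Oozie this is configured declaratively in a coordinator definition (frequency, start and end times, input datasets); the Python functions below are hypothetical and only illustrate the behaviour. Treating the end time as exclusive, and representing input datasets as plain directory strings, are assumptions of this sketch.

```python
from datetime import datetime, timedelta

def nominal_times(start, end, frequency_minutes):
    """Scheduled run times from `start` (inclusive) up to `end` (exclusive)."""
    if frequency_minutes <= 0:
        raise ValueError("frequency must be positive")
    step = timedelta(minutes=frequency_minutes)
    times, t = [], start
    while t < end:
        times.append(t)
        t += step
    return times

def ready_to_run(nominal_time, now, required_inputs, available_inputs):
    """Return (ready, missing): ready only if the time has come and no input is missing."""
    missing = sorted(set(required_inputs) - set(available_inputs))
    return now >= nominal_time and not missing, missing

# Hypothetical daily coordinator over three days.
runs = nominal_times(datetime(2024, 1, 1), datetime(2024, 1, 4), 24 * 60)
print([t.date().isoformat() for t in runs])  # ['2024-01-01', '2024-01-02', '2024-01-03']

# The first run needs two hypothetical input directories, one not yet written.
inputs = ["/data/logs/2024-01-01", "/data/users/2024-01-01"]
now = datetime(2024, 1, 1, 0, 5)
print(ready_to_run(runs[0], now, inputs, inputs[:1]))  # (False, ['/data/users/2024-01-01'])
print(ready_to_run(runs[0], now, inputs, inputs))      # (True, [])
```

A run whose time has arrived still waits until every input it depends on exists, which is what distinguishes a coordinator from a plain time-based scheduler.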

Oozie is also very flexible. Jobs can easily be started, suspended, stopped, and rerun, and Oozie makes it especially easy to rerun failed workflows. When rerunning, specific nodes can also be skipped.
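The rerun idea can be sketched as choosing which nodes to execute again. This is a hypothetical model, not Oozie's rerun implementation: in Oozie, the nodes to skip are supplied as job properties (for example `oozie.wf.rerun.skip.nodes`) when the rerun is submitted. The node names, statuses, and the `nodes_to_rerun` helper below are invented for illustration.

```python
def nodes_to_rerun(order, last_status, skip=None):
    """Return the nodes to execute on rerun, in workflow order.

    If `skip` is not given, every node that finished "OK" in the last run is
    skipped; otherwise exactly the nodes listed in `skip` are skipped.
    """
    if skip is None:
        skip = {node for node, status in last_status.items() if status == "OK"}
    skipped = set(skip)
    return [node for node in order if node not in skipped]

# Hypothetical previous run: hive-load failed, so mapreduce-aggregate never ran.
ORDER = ["sqoop-import", "pig-clean", "hive-load", "mapreduce-aggregate"]
LAST_STATUS = {"sqoop-import": "OK", "pig-clean": "OK", "hive-load": "ERROR"}

print(nodes_to_rerun(ORDER, LAST_STATUS))
# Explicitly skipping the failed node as well:
print(nodes_to_rerun(ORDER, LAST_STATUS, skip=["sqoop-import", "pig-clean", "hive-load"]))
```

Skipping nodes that already succeeded avoids repeating expensive work, such as a large Sqoop import, when only a later step failed.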

Working:

Oozie runs as a service in the cluster, and clients submit workflow definitions to it for immediate or later processing.

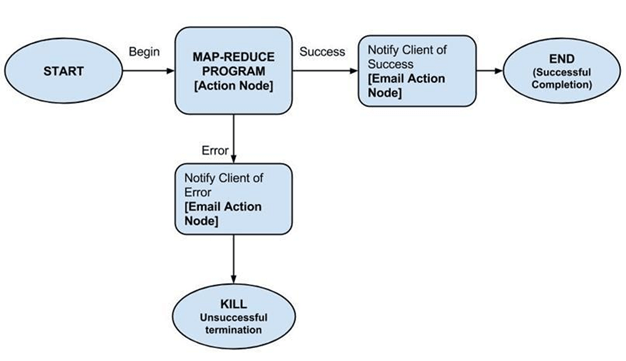

An Oozie workflow consists of control flow nodes and action nodes.

Control Flow Nodes:

Control flow nodes define the beginning and end of a workflow and control the workflow's execution path.

A control flow node governs execution between actions. Most importantly, it enables constructs such as conditional logic, in which different branches are followed depending on the result of an earlier action node.
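Conditional branching works like a switch: the first case whose condition holds decides the next node, and a default applies otherwise. The Python sketch below models that idea only; in an Oozie workflow the conditions are written as EL expressions inside a decision node. The `decide` helper, the `rows_imported` value, and the node names are hypothetical.

```python
def decide(cases, default, context):
    """Return the target of the first case whose predicate is true, else the default."""
    for predicate, target in cases:
        if predicate(context):
            return target
    return default

# Hypothetical branches based on the result of an earlier import action.
CASES = [
    (lambda ctx: ctx["rows_imported"] == 0, "end"),                        # nothing to do
    (lambda ctx: ctx["rows_imported"] > 1_000_000, "mapreduce-aggregate"),  # large batch
]

print(decide(CASES, "hive-load", {"rows_imported": 0}))     # end
print(decide(CASES, "hive-load", {"rows_imported": 5000}))  # hive-load
```

Because cases are checked in order, more specific conditions should be listed first.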

Action Nodes:

Action nodes trigger the actual work: moving files into HDFS, running Pig or Hive jobs, importing data with Sqoop, or running a shell script.
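Each Oozie action declares where to go next on success ("ok") and where to go on failure ("error"). The sketch below models one action step in Python under that assumption; `run_action` and the two task functions are hypothetical stand-ins for real Hadoop or shell actions.

```python
def run_action(name, task, ok_to, error_to, log):
    """Run one action and return the next node: `ok_to` on success, `error_to` on failure."""
    try:
        task()
    except Exception as exc:  # any failure follows the error transition
        log.append(f"{name}: ERROR ({exc})")
        return error_to
    log.append(f"{name}: OK")
    return ok_to

def copy_to_hdfs():
    pass  # stand-in for a file move that succeeds

def failing_shell_script():
    raise RuntimeError("exit code 1")  # stand-in for a script that fails

log = []
print(run_action("copy-to-hdfs", copy_to_hdfs, "pig-clean", "fail", log))       # pig-clean
print(run_action("cleanup-script", failing_shell_script, "end", "fail", log))   # fail
print(log)
```

Routing failures to an explicit node lets the workflow end cleanly, or send a notification, instead of stopping at an arbitrary point.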

Types of jobs:

There are three common types of Oozie jobs:

Oozie workflow jobs: These represent the sequence of jobs to be executed, expressed as directed acyclic graphs.

Oozie coordinator jobs: These consist of workflow jobs triggered by time and data availability.

Oozie bundles: A bundle is a package of multiple coordinator jobs, and through them the workflow jobs they trigger.
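The nesting of the three job types can be pictured as a small data model: a bundle groups coordinators, and each coordinator triggers a workflow. The Python classes below are a hypothetical illustration, not Oozie's schema; all names and frequencies are invented.

```python
from dataclasses import dataclass, field

@dataclass
class Workflow:
    name: str
    actions: list[str]  # action names; the DAG edges are omitted for brevity

@dataclass
class Coordinator:
    name: str
    frequency_minutes: int  # how often the coordinator schedules a run
    workflow: Workflow      # the workflow it triggers

@dataclass
class Bundle:
    name: str
    coordinators: list[Coordinator] = field(default_factory=list)

    def workflow_names(self):
        """List the workflows that this bundle ultimately triggers."""
        return [c.workflow.name for c in self.coordinators]

ingest = Workflow("ingest-wf", ["sqoop-import", "pig-clean", "hive-load"])
report = Workflow("report-wf", ["mapreduce-aggregate"])

pipeline = Bundle("nightly-pipeline", [
    Coordinator("ingest-coord", 24 * 60, ingest),  # daily
    Coordinator("report-coord", 60, report),       # hourly
])
print(pipeline.workflow_names())  # ['ingest-wf', 'report-wf']
```

Grouping related coordinators in one bundle lets the whole pipeline be started, suspended, or killed as a unit.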

Features:

Oozie's Java client API and command-line interface can be used to launch, control, and monitor jobs, including from within a Java application.

Oozie can execute jobs that are scheduled to run periodically.

Using its web service APIs, jobs can be controlled from anywhere.

Oozie can send email notifications after jobs complete.
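Controlling jobs "from anywhere" works over HTTP. The sketch below only builds request URLs and sends nothing. It assumes an Oozie server at the default `http://localhost:11000/oozie` and the v1 web service paths `/v1/job/<id>?action=...` (sent as PUT) and `/v1/job/<id>?show=info` (sent as GET). The helper names and the job ID are hypothetical, and the action set is a subset of what the API accepts.

```python
from urllib.parse import urlencode

# A subset of the job-level actions accepted by the Oozie v1 web service (PUT requests).
JOB_ACTIONS = {"start", "suspend", "resume", "kill", "rerun"}

def job_action_url(base_url, job_id, action):
    """URL for a PUT request that changes the state of an existing job."""
    if action not in JOB_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return f"{base_url.rstrip('/')}/v1/job/{job_id}?{urlencode({'action': action})}"

def job_info_url(base_url, job_id):
    """URL for a GET request that returns a job's status as JSON."""
    return f"{base_url.rstrip('/')}/v1/job/{job_id}?{urlencode({'show': 'info'})}"

BASE = "http://localhost:11000/oozie"
JOB_ID = "0000001-240101000000000-oozie-oozi-W"  # hypothetical workflow job ID

print(job_action_url(BASE, JOB_ID, "suspend"))
print(job_info_url(BASE, JOB_ID))
```

Any HTTP client can then send these requests. Keeping URL construction separate makes the request logic easy to check without a running server.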


Advantages:

Oozie is well integrated with Hadoop security, which is especially important in a Kerberized cluster. Oozie keeps a record of which user submitted each job and launches all of its actions with that user's privileges. It also handles the user's authentication details.

Oozie provides built-in Hadoop actions, which make workflow development, maintenance, and troubleshooting easier. With some other workflow systems, correlating workflow actions with the corresponding JobTracker jobs requires significantly more work.

The Oozie UI makes it easier to drill down to specific errors on the data nodes.

Recommended Audience:

Software developers

ETL developers

Project managers

Team leads

Business analysts

Prerequisites:

There are no strict prerequisites for learning Big Data Hadoop. Some knowledge of object-oriented programming (OOP) concepts is helpful but not mandatory; our trainers will teach these concepts if you are not familiar with them.

Useful Links: