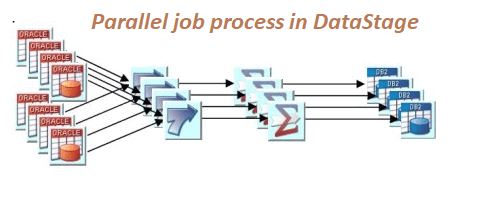

Datastage parallel job process is a program created in Datastage Designer using a GUI. It is monitored and executed by Datastage Director. The Datastage parallel job includes individual stages where each stage explains different processes. Datastage allows the users to store reusable components in the Datastage repository. It compiles into OSH and object code from C++ which makes it highly scalable and operational.

Overall, Datastage is a comprehensive ETL tool that offers end-to-end ERP solutions to its users. Moreover, Datastage offers great business analysis by providing quality data that helps in getting business intelligence. Many large business entities use this tool as an interface between their different systems and devices.

Generally, the job development process within the DataStage takes few steps from start to end. Here, I’ll brief you about the process.

At first, we need to import technical metadata that defines all sources, and destinations. For this purpose, an import tool within the Datastage Designer also can use.

Next, add all stages stating data extractions and loading of data (series file stages, datasets, file sets, DB connection stages, etc). Name change or rename the stages so they match the development naming standards.

Later, add the data modification stages (Like-transformers, lookups, aggregators, sorts, joins, etc.)

Specify the data flow from various sources to destinations by adding links.

Now, save and compile the job as it’s finished.

Finally, run/execute the job within the Designer or Directors.

Parallel jobs run in parallel on different nodes. Further, we will see the creation of a parallel job and its process in detail.

How to create a job in Datastage?

Datastage implements different processes in creating a job. During the starting phase of job creation, there exists a Parallel engine that performs various jobs. It starts the conductor process along with other processes including the monitor process. Next, the engine builds the plan for the execution of the job. Later, it verifies the schemas including input and output for every stage, and also verifies that the stage settings are valid or not. It also creates a copy of the job design. Further, it connects to the remote servers and starts the process of selecting the Leader process including the Conductor process. The SL process receives the execution job plan and creates different Player processes that further run the job. Moreover, the communication channels open between them to record the process.

In this way, after completing all the processes the DataStage starts the execution of the job.

Moreover, other different processing stages include the creation of a Datastage. These stages include the general stage, development stage, and processing stage, file stage, database stage, restructuring, data quality, real-time, and sequence stage.

Learn practically through DataStage Online Course regarding various stages of Datastage and their activities.

General Stage

This stage includes a link, a container, and annotation. Here, the link includes three different types of links such as a stream, lookup, and reference. It shows the data flow. The container is useful to share or kept privately. It helps to make the complex database design of the job easy to use. Moreover, the annotations are useful for adding floating descriptions on different jobs. It gives a way to understand the job along with ETL process documentation.

Development stage

The development stage includes a row generator, peek, column generator, sample, head, and a write range map. Each of the stage items is useful for the development or debugging of the database or data.

Here, the Row generator makes a duplicate data set that sticks to proper metadata. This is mostly useful in testing and data development. By using the column generator user can add more than one column to the data flow. Also, the user can produce test data for the column. Within Peek, the column values are recorded and the same a user can view in the director. Moreover, it includes a single input link with multiple output links.

The sample process under this stage helps to operate on input data sets. It has two modes of operating- percent and period mode. Here, the “Head” stage holds all the first “N” rows at every partition of data. It copies the same to an output data set from an input one. The ‘tail’ stage is similar to the head stage. In this, the last “n” rows are selected from each partition. The range map writes a form where a dataset is used through the range partition method.

||{"title":"Master in Datastage", "subTitle":"Datastage Certification Training by ITGURU's", "btnTitle":"View Details","url":"https://onlineitguru.com/datastage-online-training-placement.html","boxType":"demo","videoId":"CPv-ssSAbpo"}||

Processing stage

In this stage, the data is processed using various options. Here it includes;

- Aggregator: It helps to join data vertically from grouping incoming data streams. The data could be sorted out using two different methods such as hash table and pre-sort.

- FTP: It implies the files transfer protocol that transfers data to another remote system.

- Copy: It copies the whole input data to a single output flow.

- Filter records the requirement that doesn’t meet the relevance.

- Join relates the inputs according to the key column values.

- The funnel helps to covert different streams into a unique one.

- Lookup includes more than two key columns based on inputs but it could have many lookup tables with one source.

- Modify is the stage that changes the dataset record.

- Remove duplicate helps to remove all duplicate content and gives the relevant output as a single sorted dataset.

- The sort is useful to sort out input columns.

- The transformer is the validation stage of data, extracted data, etc.

- Change capture is the stage that captures the data before and after the input. Later it converts it into two different datasets.

- Compare is useful to make a search comparison between pre-sorted records.

- Encode includes the encoding of data using the encode command.

- Decode useful for decoding earlier encoded data.

- Compress helps to compress the dataset using GZIP.

- Moreover, there are many other parameters include such as Checksum, Difference, External filter, generic, switch, expand, pivot enterprise, etc.

File stage

This stage of the Datastage includes sequential file, data set, file set, lookup file set, and external source. The sequential file is useful to write data into many flat files by looking at data from another file. Similarly, the data set allows the user to see and write data into a file set. The file set includes the writing or reading data within the file set. These are useful to format data and readable by other applications. Moreover, the external source allows reading data from different source programs to output. Mostly it includes the filing of datasets and enables the user to read the files.

Database stage

The database stage includes ODBC enterprise, Oracle enterprise, Teradata, Sybase, SQL Server enterprise, Informix, DB2 UDB, and many more. These database stages include the writing and reading of the data that is included in the above databases.

Here, the Oracle enterprise permits data reading to the database in Oracle. The ODBC ent. permits looking into data and writing the same to the database. This is called the ODBC source. This is mainly useful in the data processing within MS Access and MS Excel/Spreadsheets. Moreover, the DB2/UDB ent. enables us to read and write data to the DB2 database. Similarly, Teradata also allows users to write, read, data to the Teradata database. It includes three different stages called a connector, enterprise, and multi-load. Thus, all the other databases also perform the same process as the above does.

Restructure Stage

This stage of restructuring in the Datastage Parallel job includes column imports and Column export, combine records, make a vector, promote sub-records, make sub-records, split-vector, etc. Every stage of this restructures stage serves different purposes. These used to support various rows, columns, and records and make some changes within it.

Here, using the Column export stage, we can export data to a single column of the data type string from various data type columns. The import stage of the column just acts opposite of the export. The “combine records” stage groups the rows that have the same keys. Make vector stage integrates specific vector to the columns vector. Moreover, promote sub-records provides support from the input sub-records to the top-level columns. The split-vector provides support to the fixed-length vector elements over the top-level columns.

Real-time stage

This stage also includes many functions such as;

- XML input helps to converts structural XML data into flat relational data.

- The XML output writes on the external structures of data.

- Further, the XML transformer converts the XML docs using a stylesheet.

- The Java Client stage useful as a target and lookup that includes three different public classes.

- Similarly, Java transformer helps in the links such as input, output, and rejection.

- Moreover, there are WISD inputs and WISD output. These are defined as Information Service Input and Output Stages respectively.

Data Quality Stage

Here it includes different stages like;

Investigate is the stage that predicts data modules of the respective columns of the records that exist in the source file. It offers different investigation methods too.

The match frequency stage obtains inputs from various sources such as from a file, from a database and helps to generate a data distribution report.

Moreover, MNS and WAVES represent Multinational Address Standardization and Worldwide Address verification and enhancement system respectively.

Sequence Stage

This stage consists of Job activity, terminator activity; sequencer, notification, and wait for file activity. Here, the job activity stage indicates the Datastage server to execute a job. The notification stage is useful for moving several emails by DataStage to the recipients mentioned by the client. The sequencer synchronizes the control flow of different actions while a job is in progress. Similarly, the terminator activity helps to shut down the entire progress whereas the wait for a file activity waits for emerging of an exact file. Moreover, it launches the dispensation or an exemption from rule also.

Furthermore, the parallelism in Datastage is achieved using the two methods- Pipeline parallelism and Partition parallelism.

Pipeline parallelism in Datastage performs transform, clean, and load processes in parallel. This stage of parallelism works like a conveyor belt moving from one end to another. Moreover, the downstream process begins while the upstream process continues working. This process helps in minimizing the risk usage for the staging area. Besides, it also minimizes the idle time held on the processors working.

||{"title":"Master in Datastage", "subTitle":"Datastage Certification Training by ITGURU's", "btnTitle":"View Details","url":"https://onlineitguru.com/datastage-online-training-placement.html","boxType":"reg"}||

In Partition parallelism, the incoming data stream gets divided into various subsets. These subsets further processed by individual processors. These subsets are called partitions and they are processed by the same operation process.

Further, there are some partitioning techniques that DataStage offers to partition the data. They are, Auto, DB2, Entire, Hash, Modulus, Random, Range, Same, etc.

Moreover, the DataStage features also include any to any, platform-independent, and node configuration other than the above. These features help DataStage to stand the most useful and powerful in the ETL market.

The above stages help in the processing of the Datastage parallel job.

Summing Up

Finally, it concludes with the details on how Datastage parallel job processing is done through various stages. The Datastage is a platform of ETL which helps in the data processing. It includes various data integration solutions that present data in the required form. Since it’s an ETL tool, it consists of various stages within processing a parallel job. To get practical knowledge of various stages and their relevance, DataStage Online Training will be useful. This learning will enhance skills and help to prosper in their usage in the actual work.